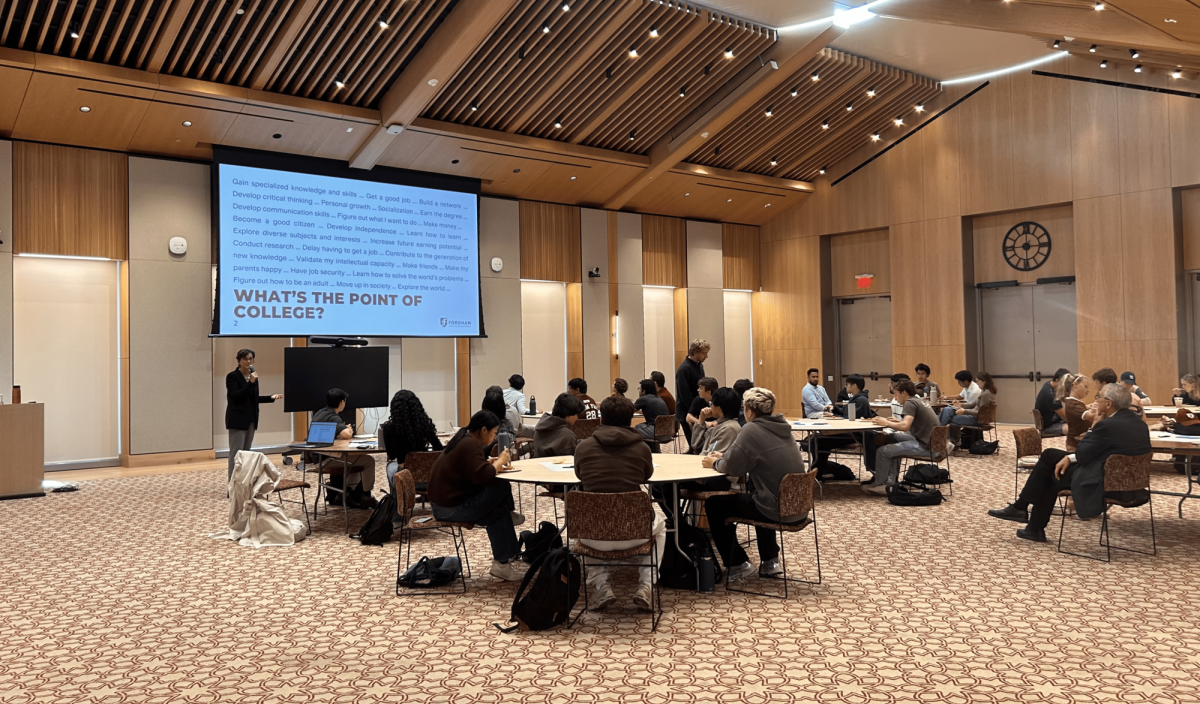

Since the launch of ChatGPT at the end of 2022, artificial intelligence (AI) has had an explosive introduction into everyday life. With it, industries, institutions and individuals have been forced to consider the implications of such technology, especially in academic settings. Over the summer, Fordham held a strategy session aimed at determining the correct way to handle AI use in the classroom. A report from the session was sent to instructors, with suggestions and sample language for those who wished to mention AI policies in fall semester syllabuses. The report makes room for three approaches to AI in the classroom: no AI, limited AI and full AI. In the last kind of class, Fordham suggests students be required to indicate when something is generated by AI.

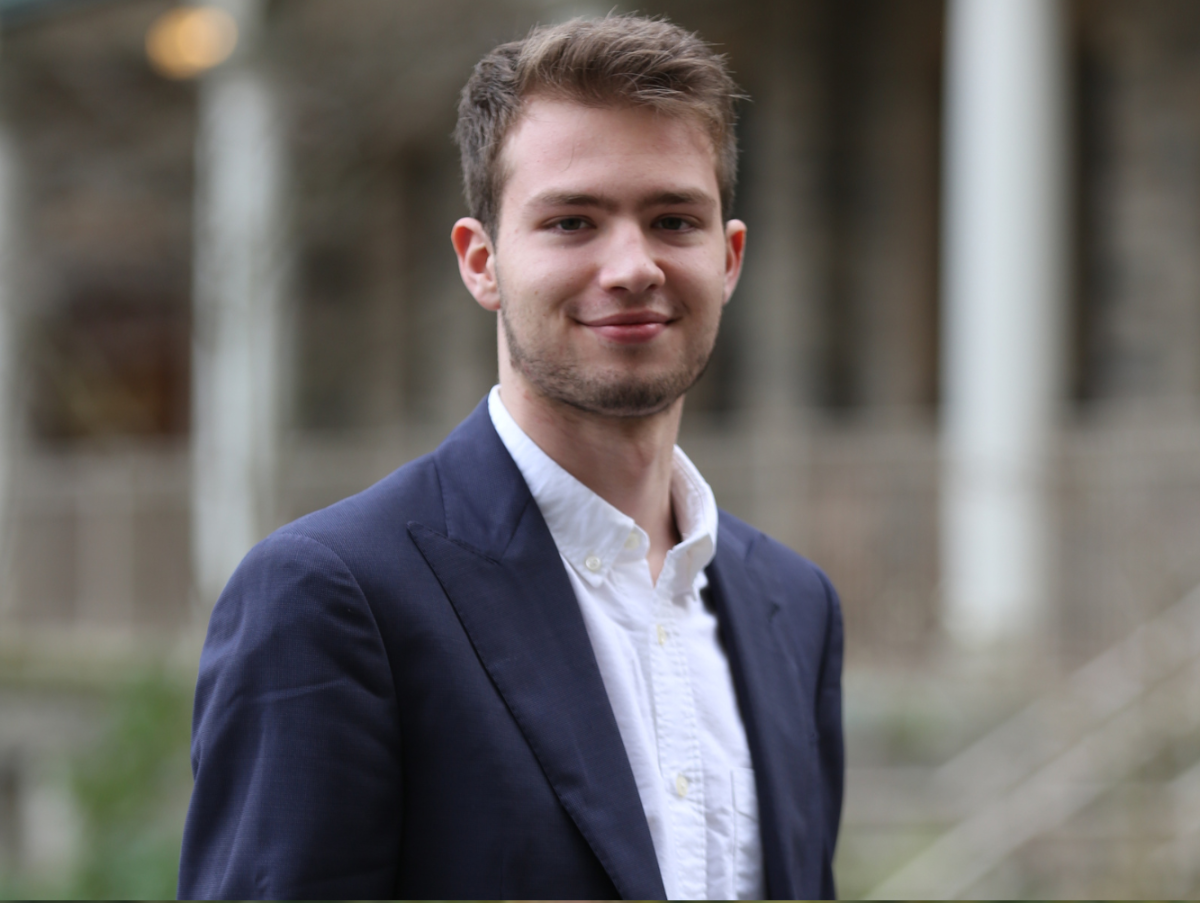

For the time being, Fordham does not have a university-wide policy on AI. According to Dennis Jacobs, provost and senior vice president for Academic Affairs, such a policy is “unlikely to be able to address the vast array of situations where AI may appear in Fordham’s future.” However, that is not to say that AI may be used for any purpose without repercussions.

Fordham Law School has modified its honor code to explicitly prohibit students from using AI on exams. At the undergraduate level, however, policies on AI are left mostly in the hands of instructors, and for this reason the consequences for using it (or lack thereof) vary.

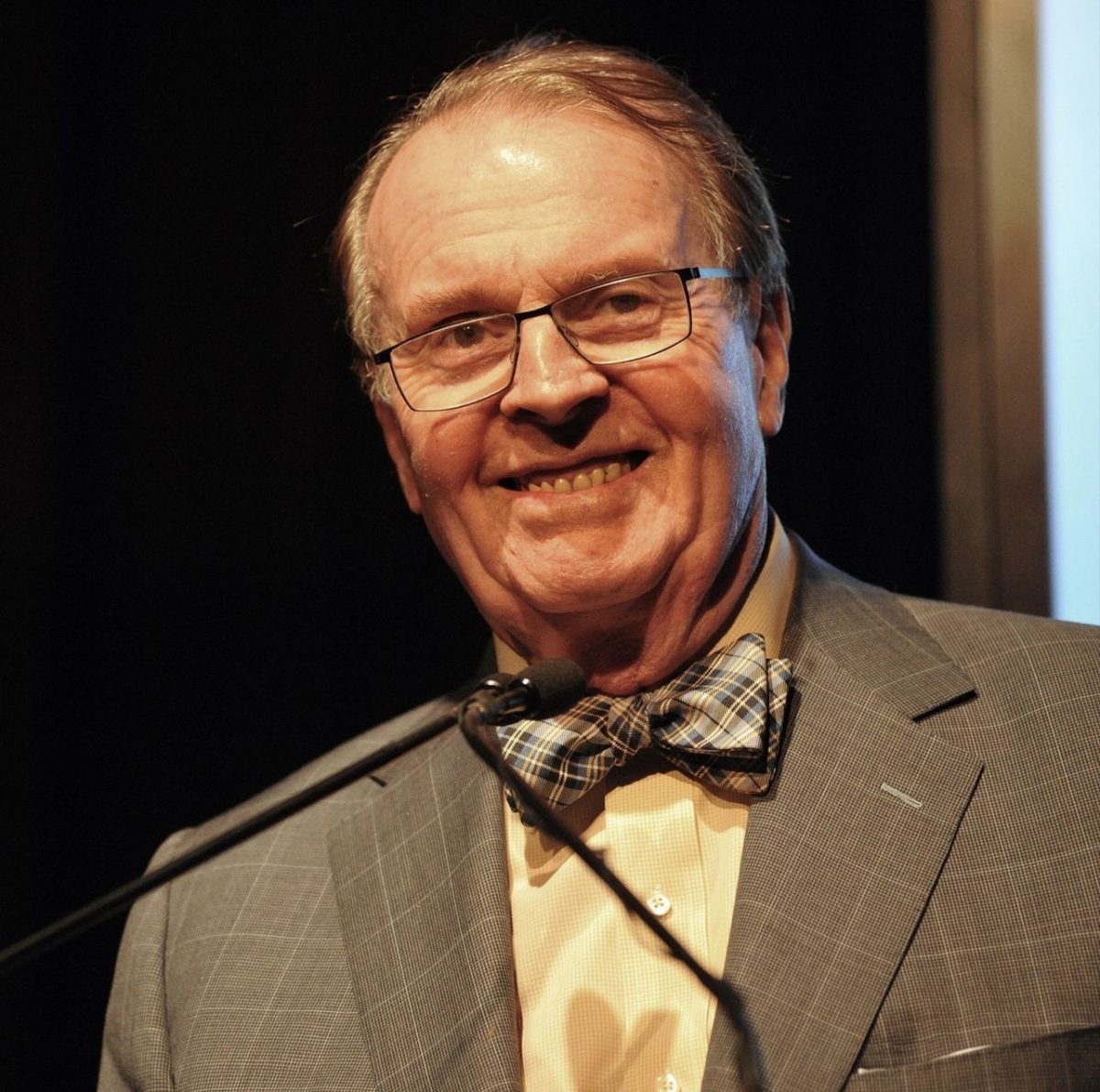

Professor Cathryn Prince defines her policy as “the middle road.” She favors the second of the three options, limited AI use in the classroom, and appreciates the novel technology as an important tool, both inside and outside of an academic setting. Prince contends that AI will have a large role in the near future, and as such, she feels it benefits her students to allow it (with restrictions) in her classroom, saying, “[AI] is going to be a part of your job and day-to-day life, so the more we know how to use it, the better.” At the same time, Prince recognizes the problems that AI presents. As a writer and journalist, in addition to a professor of communications, she is mindful of the value of a unique voice, which some generative AI programs seek to replace. Whether it be misinformation, disinformation, plagiarism or sourcing, Prince said, “there are a lot of issues that will come up which we have to be aware of.”

Some educators worry that AI has the potential to undermine learning. According to Provost Jacobs, “GAI [generative AI] could short-circuit a student’s capacity for deep learning if one allows GAI to replace the struggle to develop humanistic intelligence with the allure of instantaneous artificial intelligence.”

But it is not only educators who worry about the future of pedagogy. Students, too, are concerned about how accessible AI is to young people.

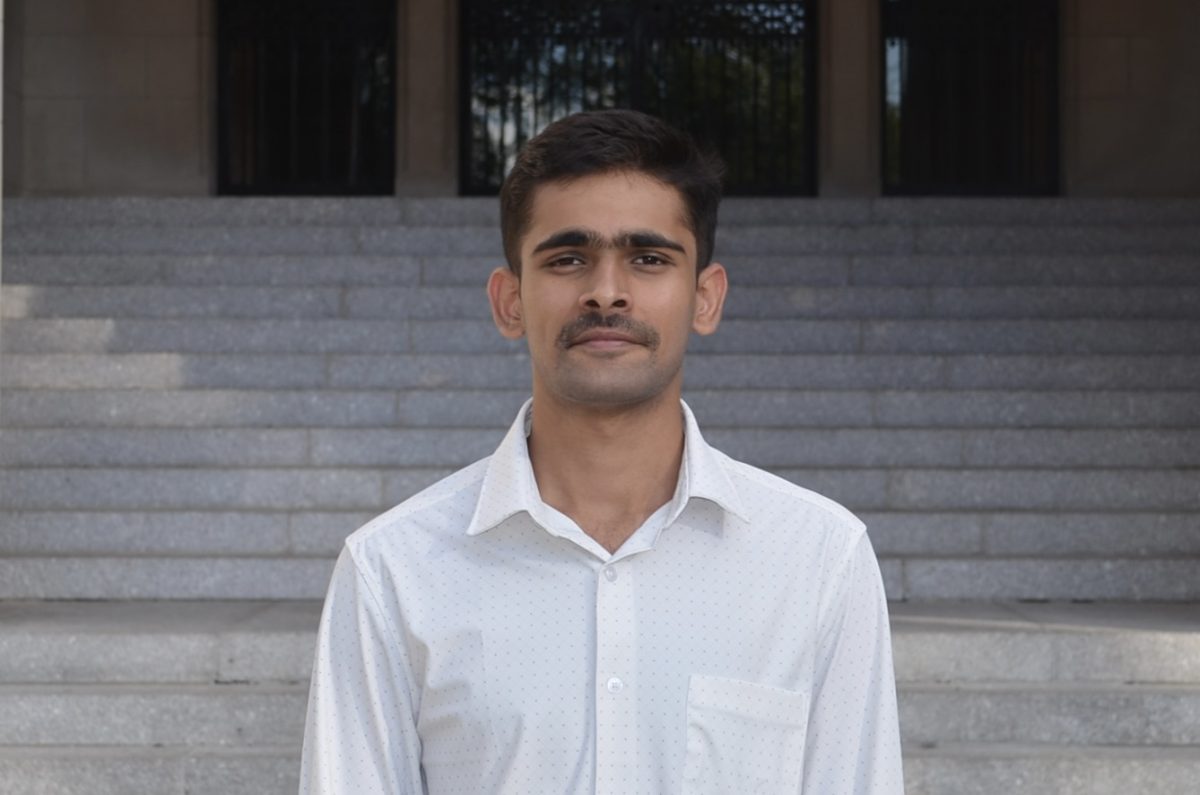

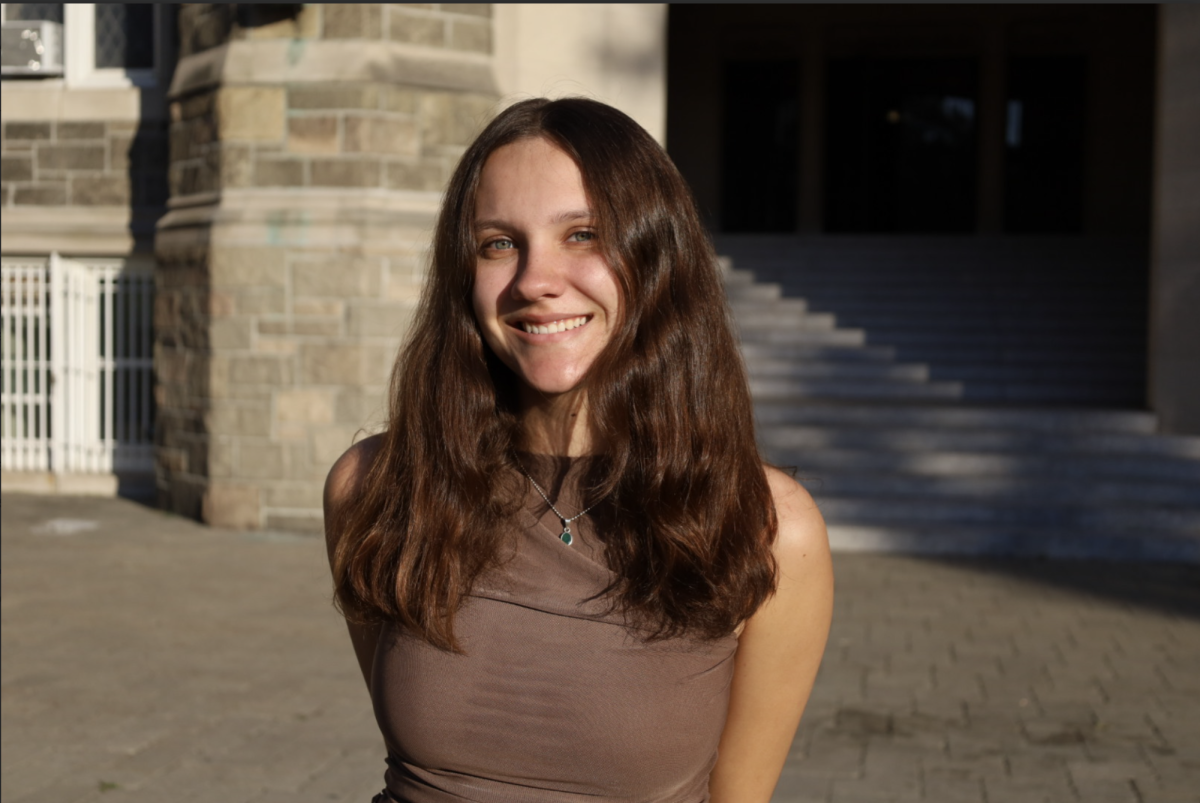

Shannon Jensen, FCRH ’24, emphasized the importance of every student building a strong educational foundation without the assistance of AI, arguing that they “need to learn how to write” and cannot be allowed to use these programs in school, especially middle and high school, without guardrails.

Hanna Giedraitis, FCRH ’24, shared Jensen’s concerns, but clarified that she does not consider AI inherently harmful or academically dishonest. “I think you should be able to use it like a more specific search engine,” Giedraitis said. “To summarize readings or brainstorm potential essay topics, but never to write the actual substance of an assignment.”

The university has yet to announce an official stance to the community.