The Office of Information Technology at Fordham recently announced in an email to students that it is launching Fordham-supported artificial intelligence (AI) tools. The office stated that the purpose of these tools is “to improve productivity and learning.” The announcement follows widespread warnings against the use of AI technologies in Fordham classes since those technologies rose in popularity.

The Office of Information Technology explained that it launched Fordham-supported AI in response to requests from the community for support with recent advances in the field. The new AI tools enabled for Fordham students include those introduced by Zoom, Grammarly and Google.

The newly supported AI features were enabled for students and faculty university-wide on Jan. 19, 2024, one day after their launch was announced. The features include Zoom’s meeting summaries, Grammarly’s generative AI and Bard, Google’s counterpart to ChatGPT.

Although the Office of Information Technology has shown support for AI by enabling these features, its announcement came with clear warnings for students to proceed with caution when using AI tools, specifically advising against entering personal information and against using AI tools that train central models on user input.

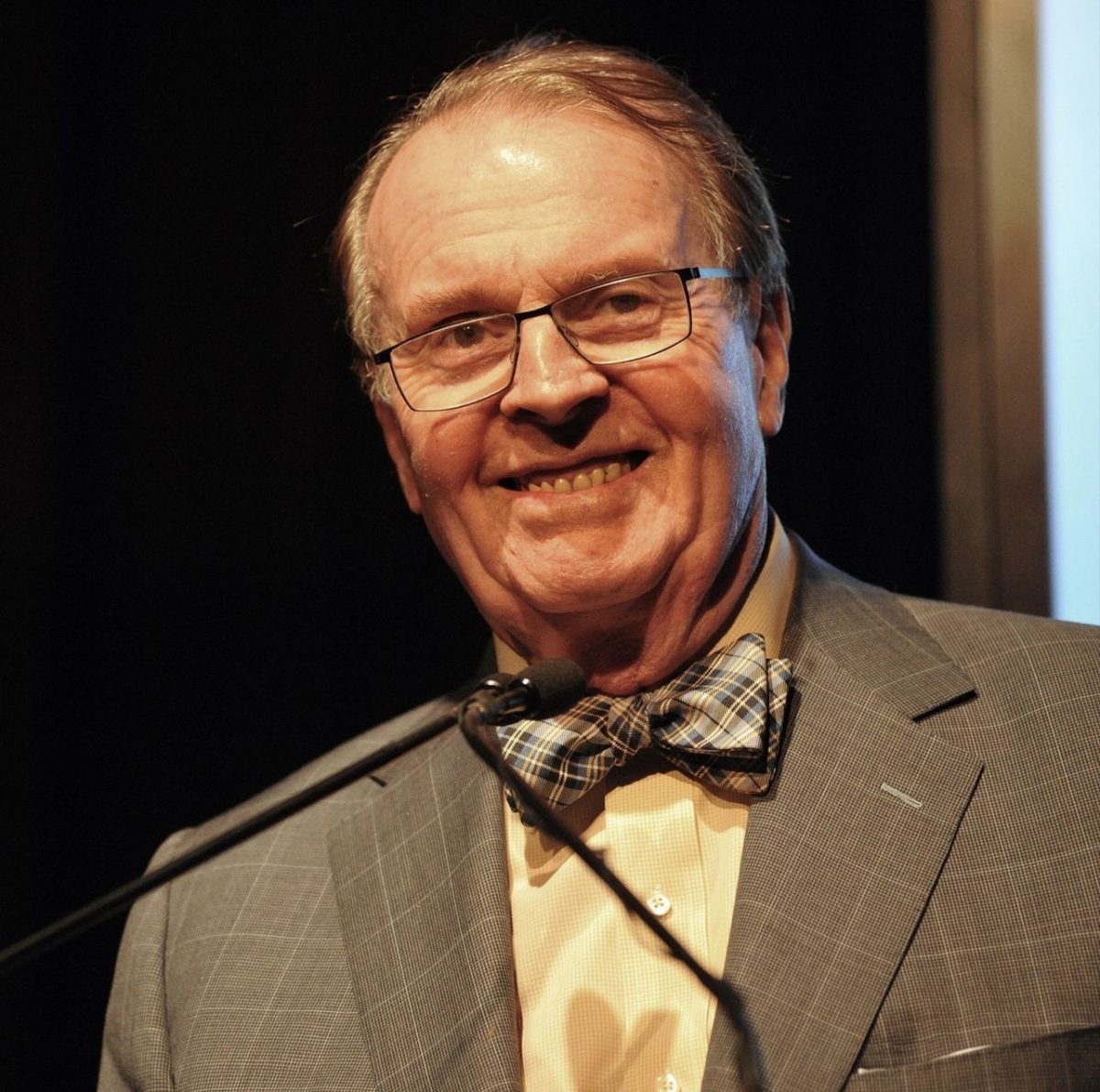

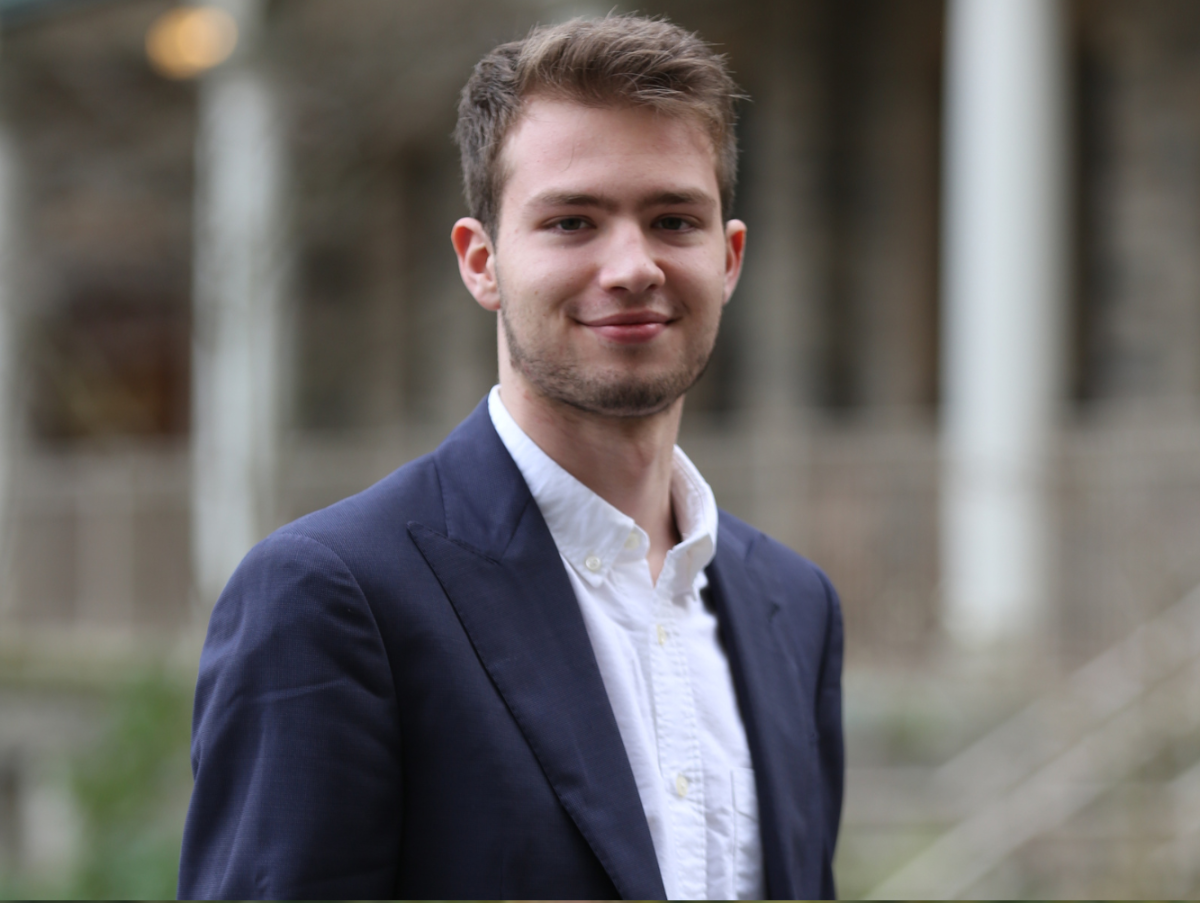

Fordham faculty seems to have received news of the newly enabled AI tools at the same time as students. When asked whether he had received advance notice, Dr. Nicholas Smyth, a professor in the philosophy department, said, “No. I have searched through my email and haven’t been able to find any notice, and this wasn’t discussed at any meeting at which I was present. Other faculty I knew were similarly surprised by it. I guess we don’t have the right to discuss or vote on these decisions.”

It is hard to say how the launch would have gone had faculty voted before the decision was made, because professors have reacted to the news of Fordham-supported AI in widely varying ways.

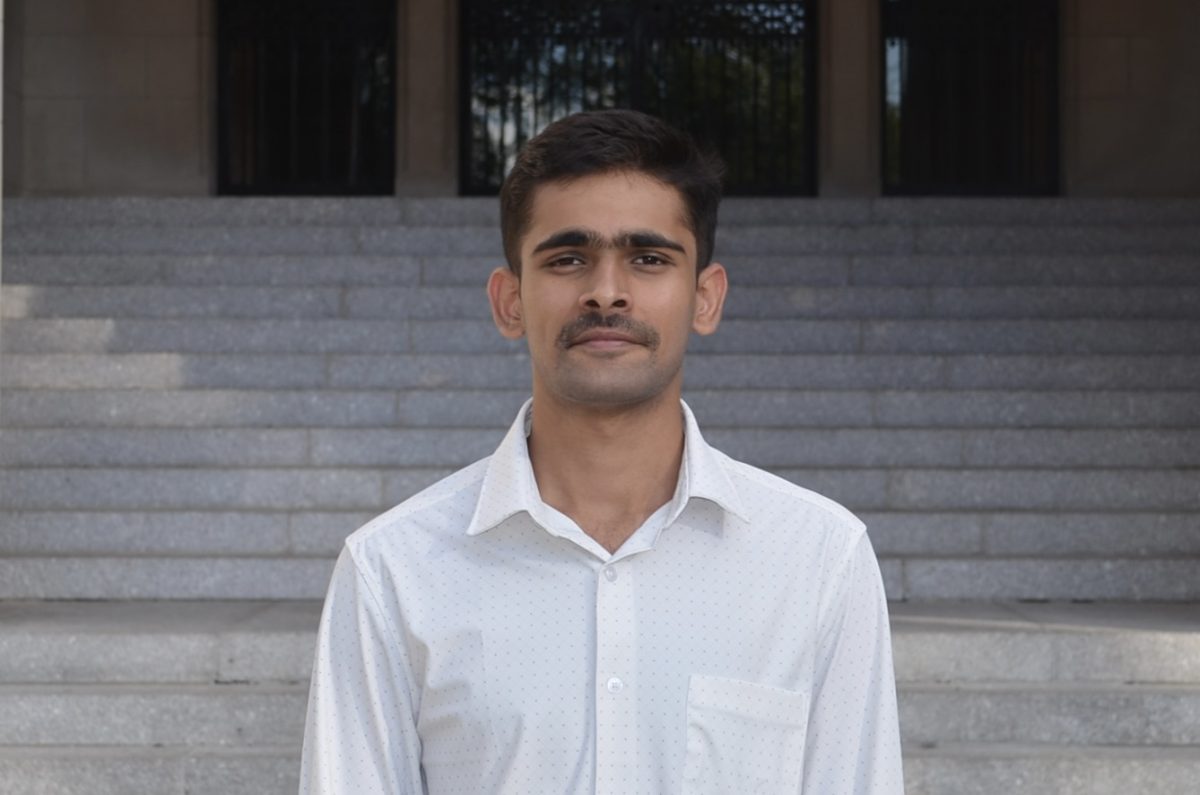

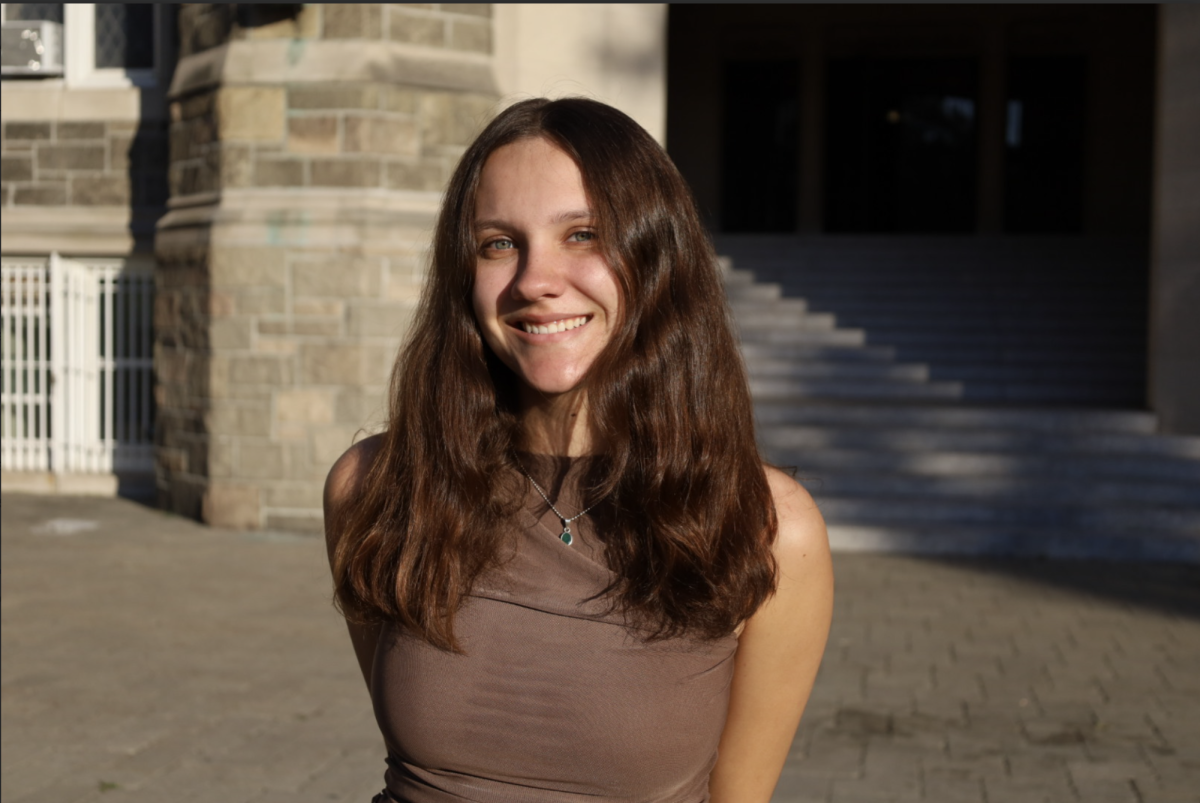

Professor Kelly Ulto, a program director in the Gabelli School of Business, also said she was not given advance notice: “I was not aware of most of these new tools until I received the email, and I am anxious to learn more.” However, she said she has a more optimistic outlook than other professors. Ulto said she finds the tools useful in the classroom, for students and faculty alike, and wants to find constructive uses for the advancing technology.

Of the tools and IT support she has used in the classroom, Ulto said the technology “has allowed me to connect with my students more effectively, especially allowing for more ways to offer feedback on assignments and support of their projects.” She cited these successes as the reason she is excited to embrace new technology in her classes.

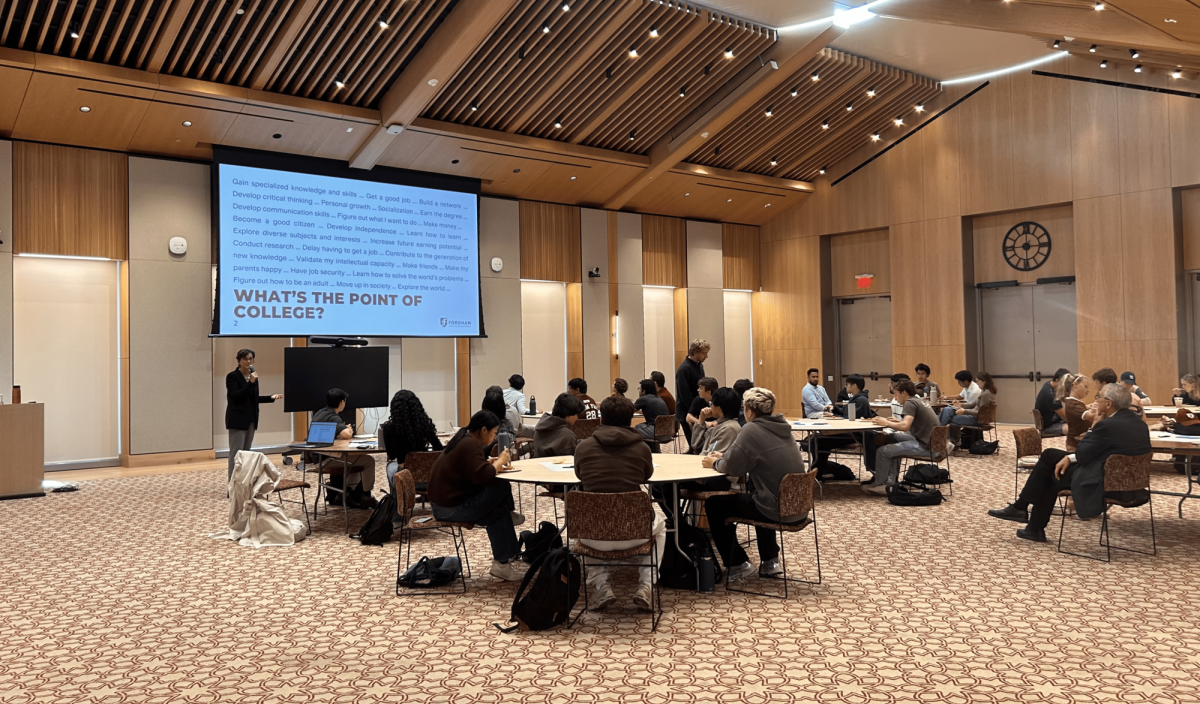

Some of the differences in professors’ opinions stem from the subjects they teach, since AI’s impact varies with the coursework. Smyth explained how his department differs from others, saying, “As a philosophy professor, my job is literally to teach people how to think. If I allow students to use a tool that does the thinking for them, I am not doing my job… While I can see the argument for AI use in the sciences, engineering and so on, here in the humanities we must do our best to cultivate these heroic young minds, and not let their thinking be directed by a giant data center in San Francisco.”

Students have grown accustomed to seeing strict rules on the use of AI tools in class syllabi. Yet Smyth told the Ram that the faculty side of Blackboard offers AI tools that generate essay prompts, discussion questions and rubrics. This means that, without knowing it, students may be paying Fordham tuition only to be “taking a part human part robot class,” as Smyth put it.

Smyth asked, “What is the place of the human in the society we are building? The corporate tech bros have spoken, and their answer is: ‘On the sidelines, hopefully.’ Rams, is this your answer?”