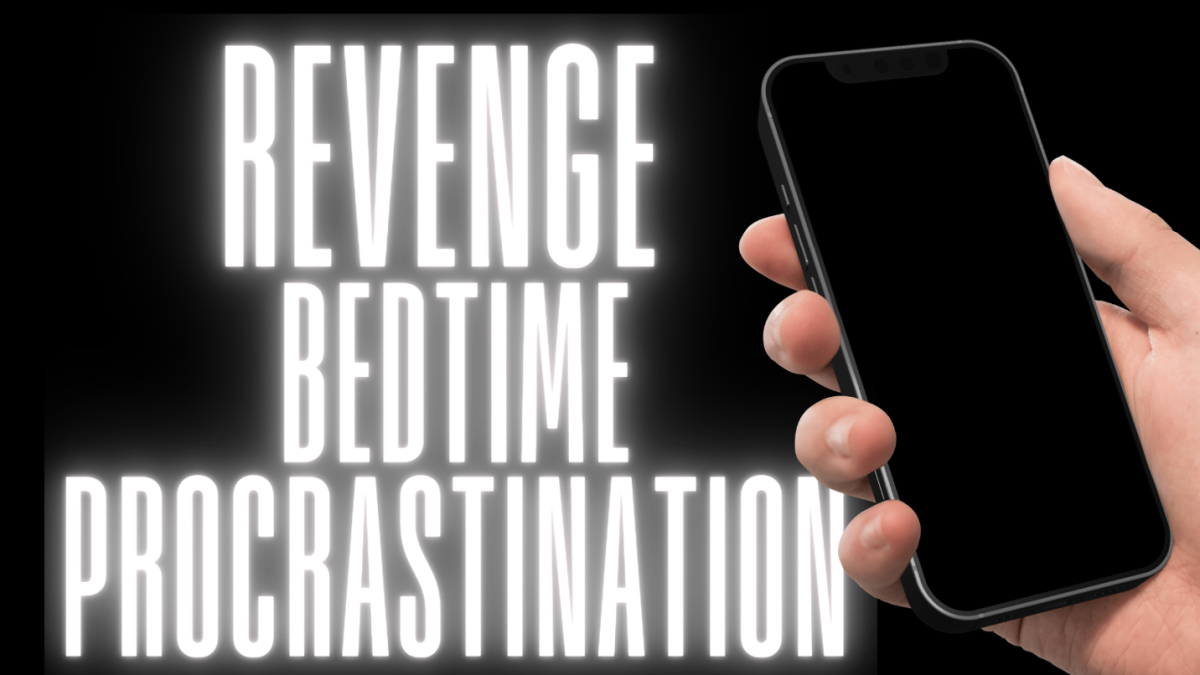

Classrooms, social media, music: artificial intelligence (AI) has permeated every aspect of our lives. But where do we draw the line? AI is not new to the world of politics, but AI-generated political messages recently hit the headlines for their role in the election cycles of Pakistan and South Korea, offering a possible glimpse into the future of campaigns and how people vote. The speed at which AI has enveloped our world is grounds for concern. In the age of social media, with rampant misinformation at one’s fingertips, campaigns should steer clear of using AI.

The Pakistan Tehreek-e-Insaf (PTI) party, chaired by Imran Khan, secured the most Parliament seats on Feb. 8, 2024, amid rumors of election tampering. After losing Parliament’s vote of no confidence in April 2022, Khan was ousted from his role as Prime Minister. Since his arrest in August 2023 and subsequent sentencings, PTI candidates and their family members have reported being detained and intimidated by the military. Internet blackouts and censorship of PTI news coverage have also been used to curb support for the party’s platform. Khan and the PTI have strongly opposed the military’s interference in the political sphere.

Despite being in jail, Khan was still able to deliver political speeches through artificial intelligence. Believed to be “the only one in his party who had the charisma to attract the masses,” Khan campaigned from behind bars with speeches that used AI to mimic his voice, generated from writings he had slipped to his lawyers. In Khan’s case, artificial intelligence presented an opportunity to bypass his imprisonment, speak to his supporters and garner votes.

In South Korea in 2022, the People Power Party created an AI avatar of presidential candidate Yoon Suk-yeol. The candidate’s likeness interacted with voters, used slang to appeal to a younger audience and offered humor to distract from past scandals. Yoon went on to win the election. Whereas Khan had years in the public eye as a former cricket star and politician to cultivate a charisma that could charm voters, Yoon charmed voters artificially.

Artificial intelligence offers real benefits in the world of politics. Both Khan and Yoon reached voters in a way that helped secure election wins. AI tools can generate messages, ads, images, videos and speeches for campaigns. The software has become mainstream and cheap to use, cutting down on campaign costs. It can help campaigns with less funding compete with the digital teams of their wealthier opponents. The possibilities seem limitless, and AI might just be the future of politics.

Because the software has become more mainstream in recent years, virtually anyone can create media of a politician, a celebrity or even someone you know. A person’s image and voice are now more vulnerable to being used in artificially generated photos or videos. AI has been used to dub the voices of artists like Bad Bunny over viral TikTok songs and to create explicit, nonconsensual images of children, adults and celebrities like Taylor Swift. The world of AI has grown exponentially, with little regulation and few safeguards in place to address the threats it poses. And while Khan and Yoon both demonstrated a desire to connect with voters, AI makes this connection inauthentic.

The integration of artificial intelligence into political messages and campaigning is a concern that needs to be addressed. In 2018, filmmaker and actor Jordan Peele created a deepfake video of former President Barack Obama to warn of the dangerous capabilities of AI, and the argument still stands today. Technology has advanced, and with the accessibility of artificial intelligence, anyone can make a political message under the guise of a politician. Before the New Hampshire primary elections in January, some voters reported receiving a robocall that used an artificially generated imitation of President Joe Biden’s voice to dissuade them from going to the polls. With the rise of AI, media literacy is needed to combat disinformation, but today many people lack confidence in their ability to detect misinformation or choose not to investigate it further.

In a world with widespread misinformation and a lack of media literacy, artificial intelligence, while it does offer perks, is already affecting elections. Voters are susceptible to believing the first thing they hear or see. AI is an extremely underregulated tool that threatens the future of politics. Voters have to be made aware of AI manipulation and be able to trust that their voices still count in the voting process. If artificial intelligence continues to grow at this pace without constraints, we should consider how much we value authenticity, transparency and shared humanity.

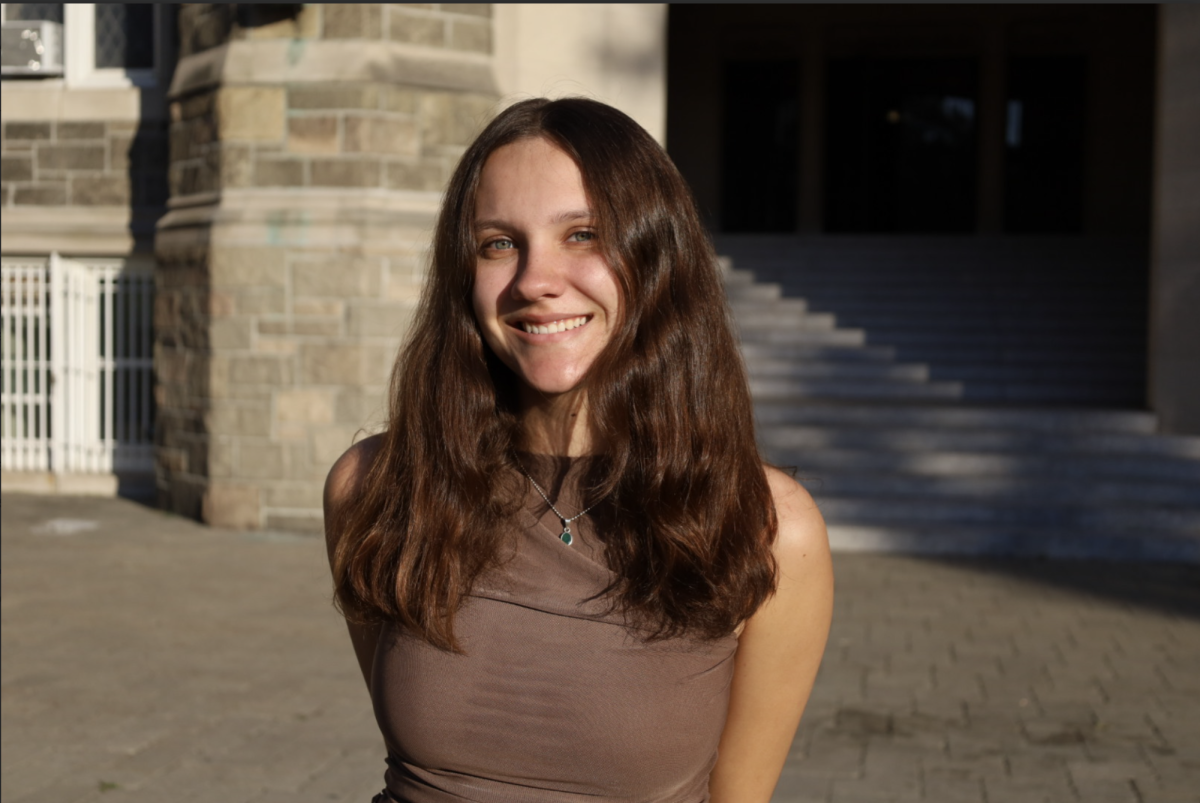

Indira Kar, FCRH ’25, is an international studies major from St. Louis, Mo.