Most students at Fordham University have used artificial intelligence (AI) at some point, whether to get organized, create a study guide or answer a simple question. While it may be useful in these respects, it's far from useful or efficient in a classroom setting. Once professors understand what AI can do, one of two things happens: either we are assigned more papers, or we take monitored exams to prove our understanding of the course content.

As a result, a student's workload increases, and they're pushed toward using AI to summarize a reading, solve math problems or figure out whatever else they need to pass the class. The tradeoff is that you rob yourself of actually learning the content. Part of Fordham's mission statement says the university "seeks to foster…careful observation, critical thinking, creativity, moral reflection and articulate expression." AI undermines these values by handing students answers to the very questions they are meant to ask themselves over their four years here.

AI tramples our creativity, and its answers are lackluster. Let's say a student is stuck on a prompt. They can go to ChatGPT and ask it to answer the prompt for them. If they like the response, they can reword it to get past AI detectors and receive full credit. There are two things wrong with this: first, it is plagiarism, and second, the student robs themselves of the chance to practice and learn how to answer the question being posed. On top of that, the writing AI produces is generic. Professor Hittner-Cunningham, who teaches Composition II, put it like this: "It's very clear when it's AI-generated since it speaks in generality." If our writing ends up unimaginative, we can't express ourselves in the way Fordham's curriculum encourages. Professors, for the most part, appreciate it when students are creative with their writing rather than repeating what a textbook has already said. AI lacks the creativity and depth needed to produce something powerful for any given task.

AI can also give dangerous information if you take it at face value. When you search for something on Google, you'll most likely get an AI Overview of what you're looking for, but in some cases these summaries are misleading or even dangerous. While these errors are relatively easy to spot, the same problem can lead to more dangerous outputs if left unchecked. Large language models (LLMs) are the main type of generative AI students use and include tools such as ChatGPT, Google Gemini and QuillBot. These models respond to human prompts, and their outputs depend on the data they were trained on, but they can still produce misinformation or describe things that simply do not exist.

Because of this misinformation, AI is better used for mundane tasks that benefit from being streamlined, such as building a schedule or creating a study guide from a student's own notes. Used this way, it's responsible, doesn't take away from learning and can fit within Fordham's mission, but not when it's used as a shortcut.

AI is biased as well. The AI that developers create typically demonstrates some bias, though in some models it is more obvious than in others. RightWingGPT wears its bias on its sleeve, but it was created in response to a perceived bias in ChatGPT. Regardless of which AI we choose to use as a student body, it will show a bias one way or another.

If AI is biased, undermines creativity and gives out misinformation, doesn't that automatically make it bad? Not necessarily. The issue runs deeper than that, and it really comes down to the context in which AI is used. It's good for laying out the basics of a subject so that we can build a deeper understanding from there. I'm not against students using AI if it actually helps them work toward the answer rather than handing it to them. If we're going to be responsible with it, I'm all in. However, in a classroom setting where you're meant to learn something and understand it in your own way, AI poses a problem if it leads all of us to interpret something the same way.

If AI can overcome this shortcoming and allow students to interpret the same concept differently, I can get behind it, but as it stands, I don't see it fitting properly at Fordham.

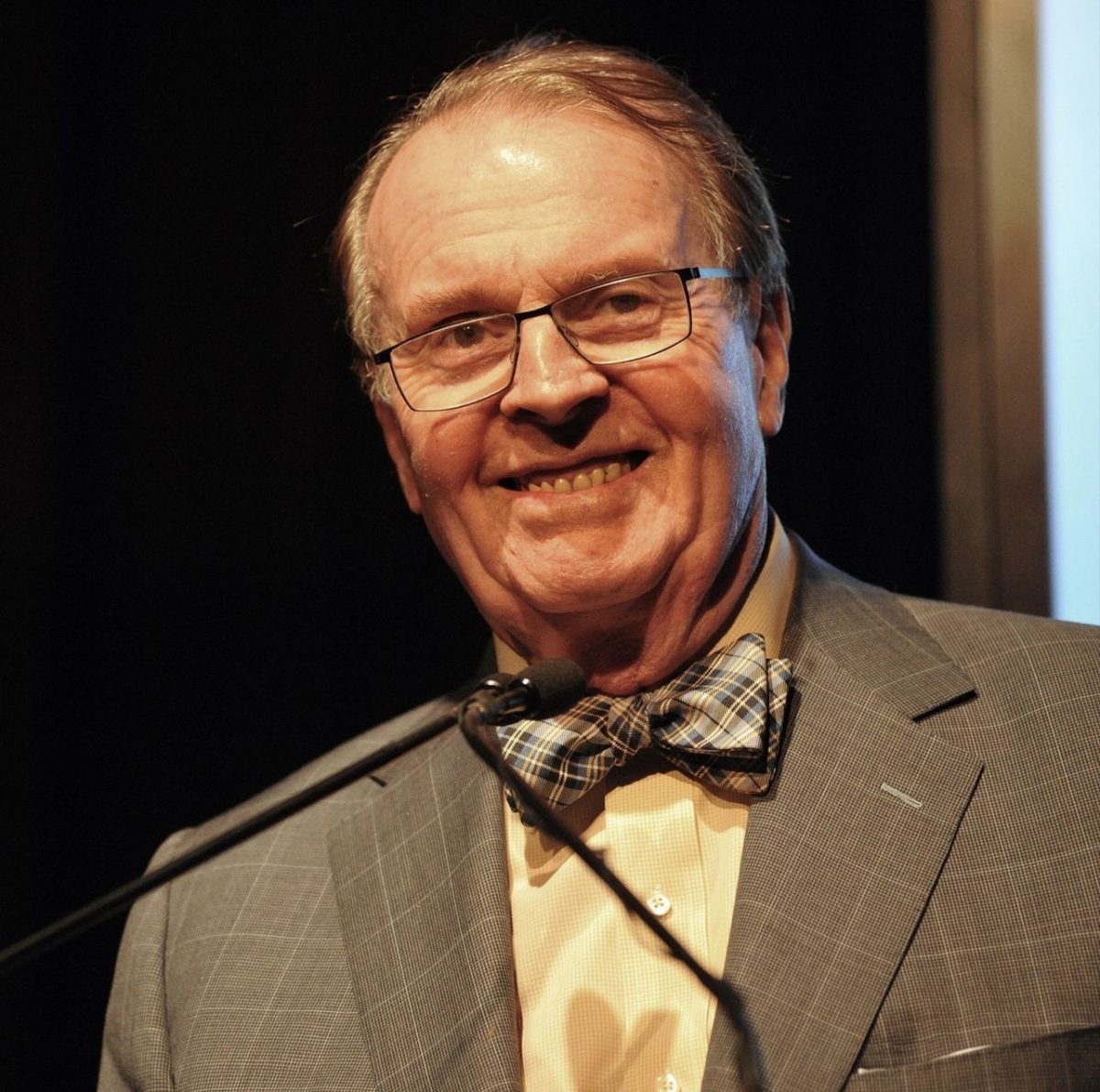

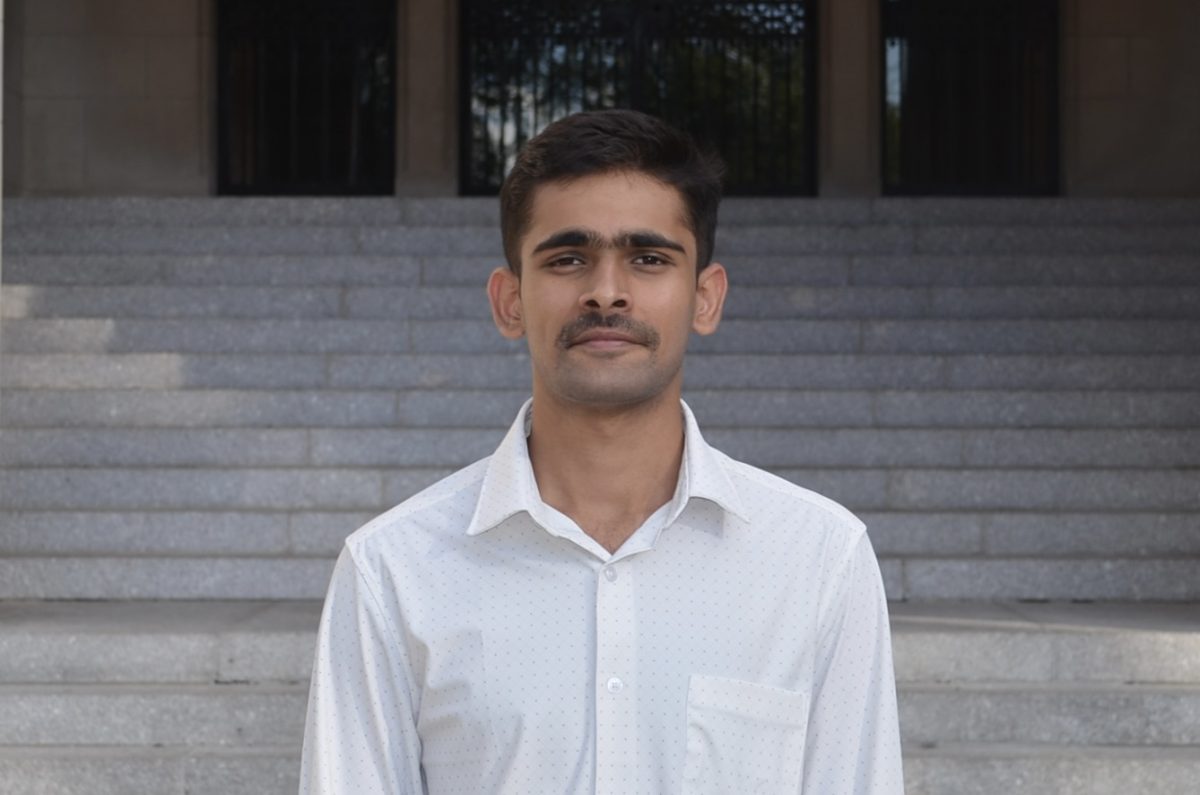

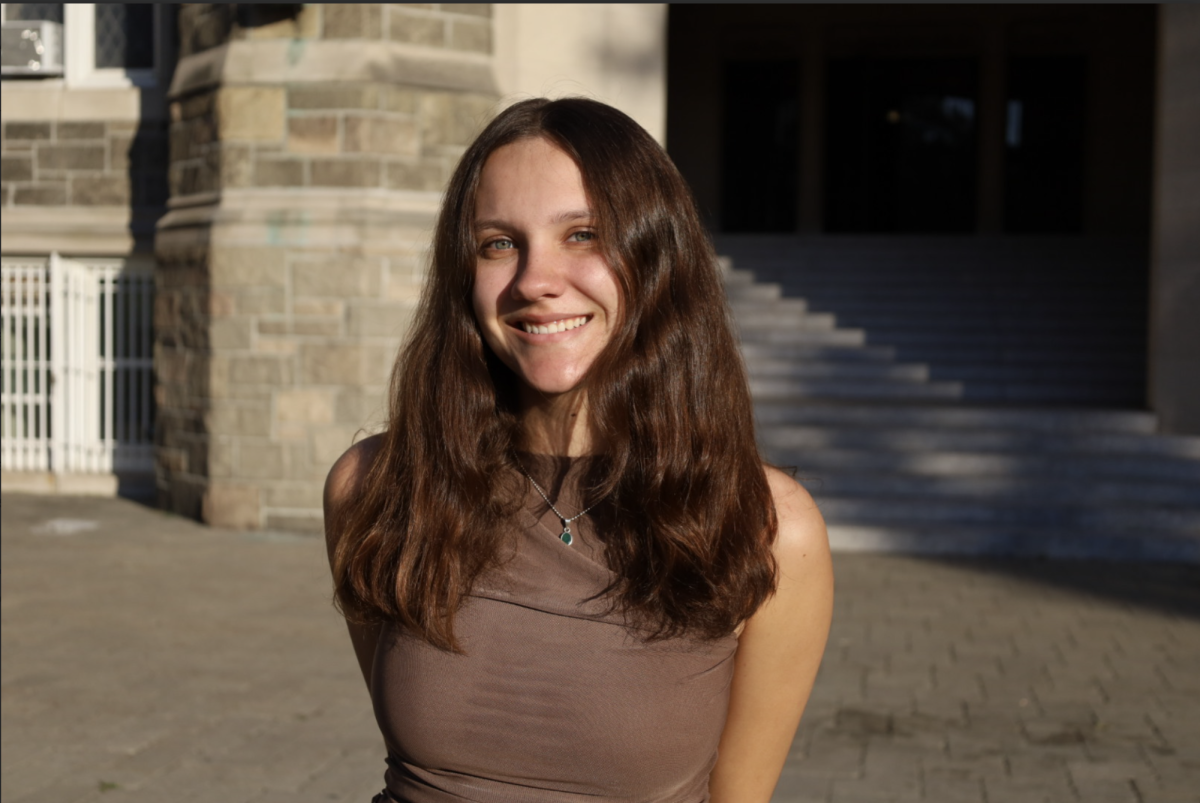

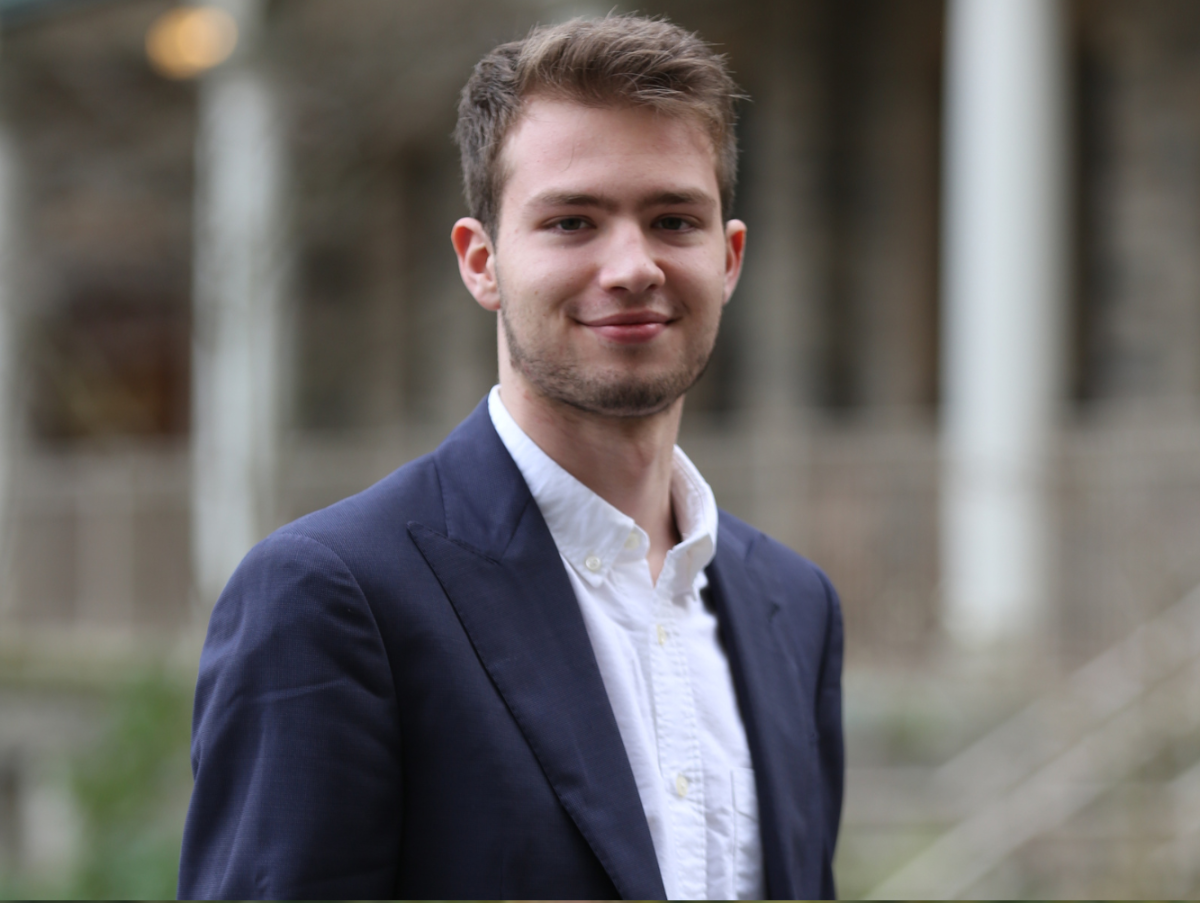

Gabriel Capellan, FCRH ’28, is a journalism major from The Bronx, NY.