Defined as the creation of “intelligent machines” or “intelligent computer programs,” artificial intelligence (AI) is a development whose scale often draws comparisons to the internet, which, paradoxically, it is on track to replace. Professionals in all fields and at all levels, including doctors and educators, are grappling with the possible benefits and threats that AI poses to their work. Concern is prominent even at the national and international levels: last month, President Joe Biden signed an executive order mandating that corporations report how their systems could be weaponized in conflict. In Britain, Prime Minister Rishi Sunak hosted an international conference (attended by Vice President Kamala Harris and Elon Musk) on preparing for the security threats AI could pose.

In education, AI has set off alarms and sparked intrigue in both K-12 schools and academic institutions. Schoolteachers are concerned about academic integrity, especially since the use of AI programs is often difficult to detect. At the same time, students and teachers have found AI useful for preparing schoolwork and lesson plans.

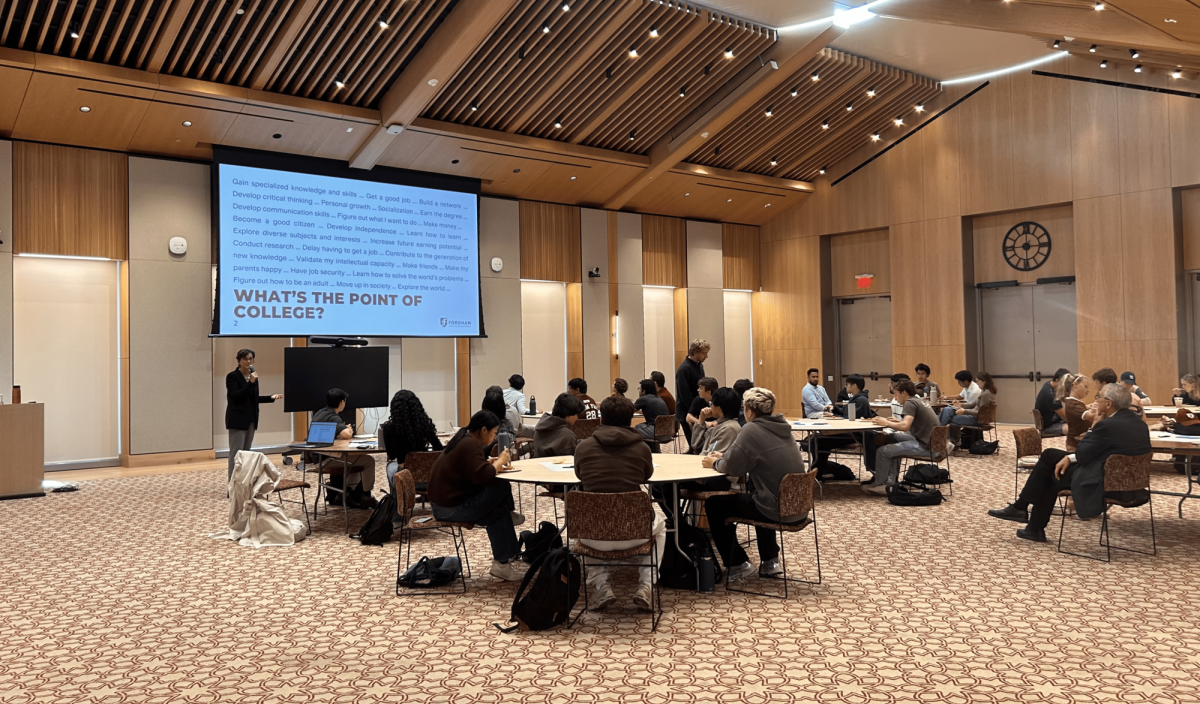

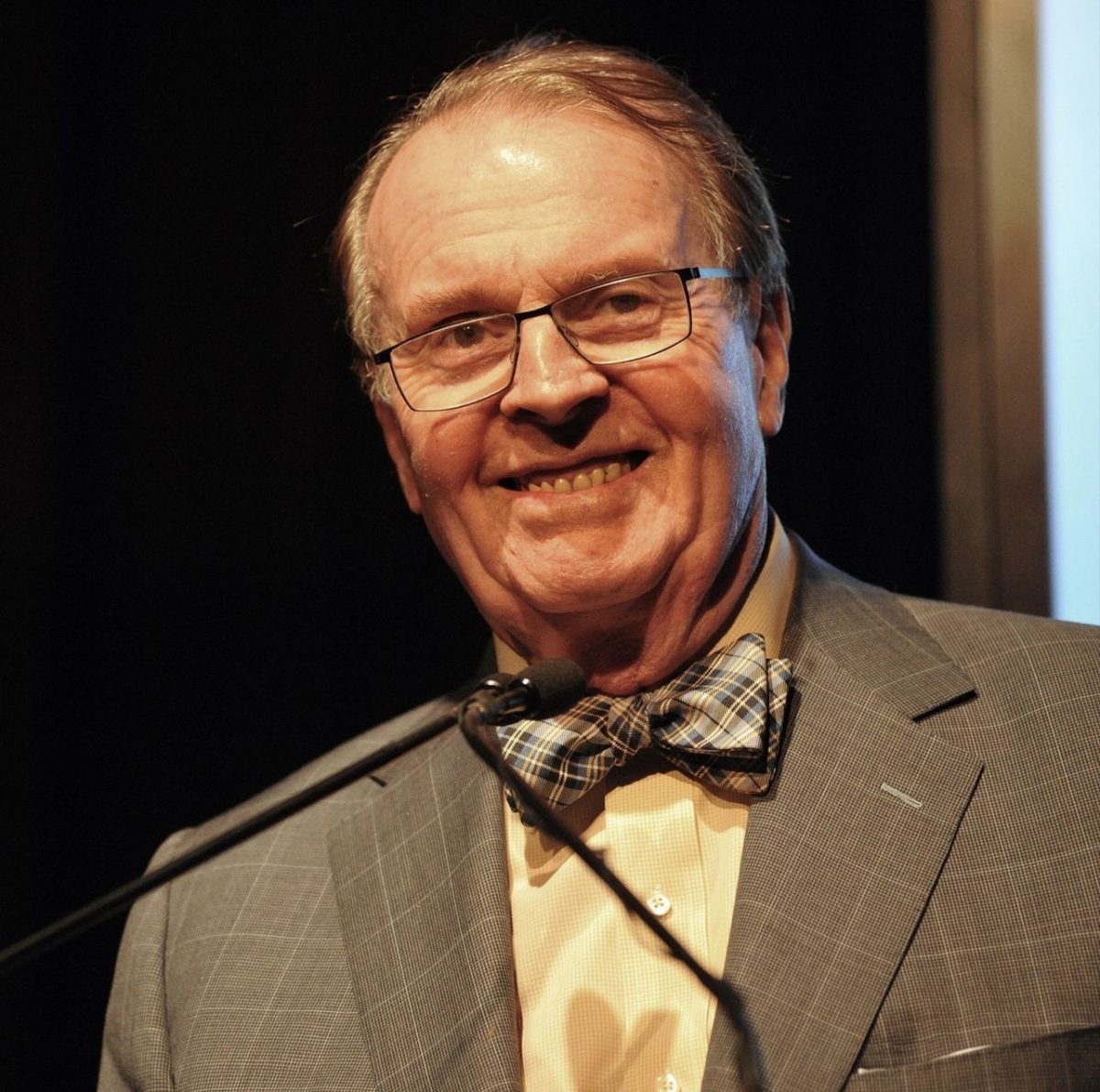

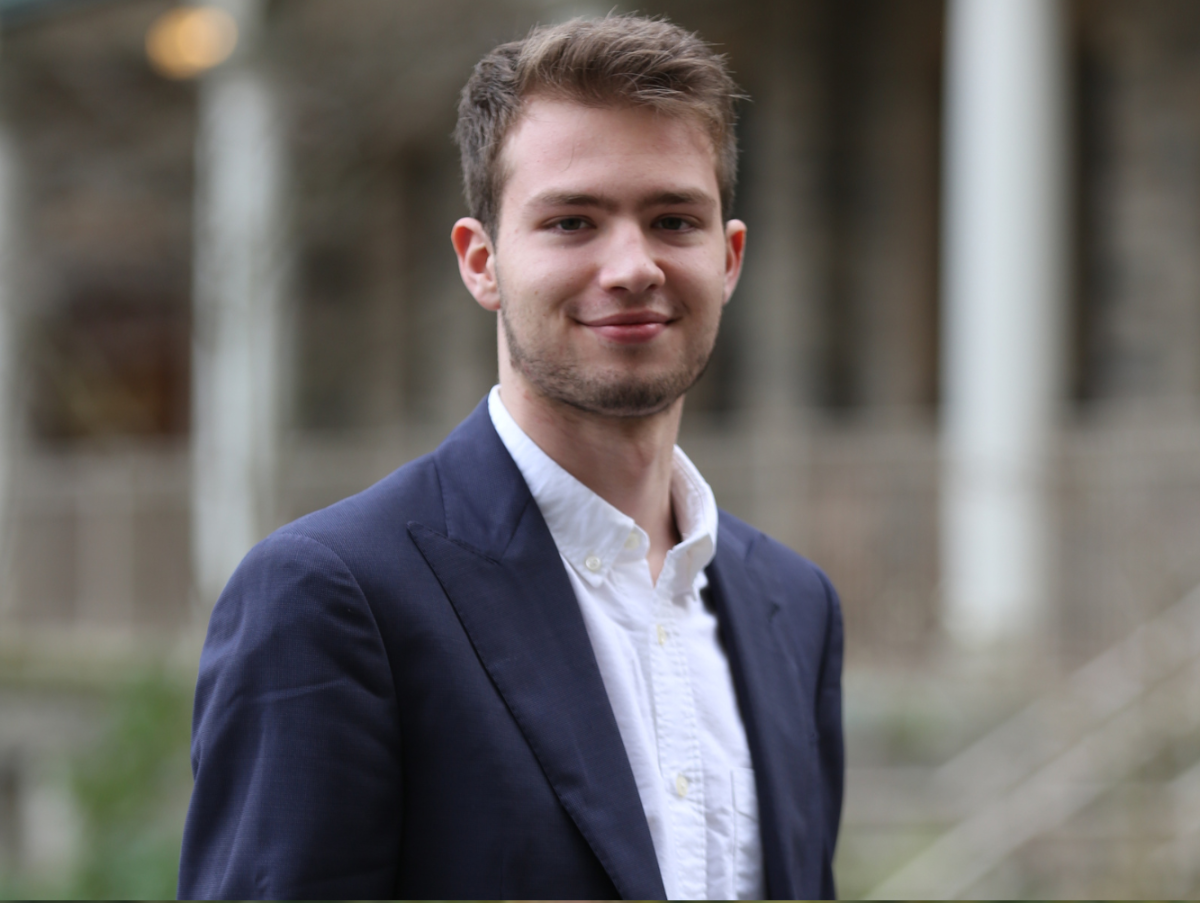

Academia, particularly the humanities, finds itself distinctly at odds with the advent of AI. In departments at Fordham, AI is a hotly debated issue, with opinions divided on its utility and its implications for future study. Corey McEleney, professor of English at Fordham, said that “the responses among humanities professors have ranged from apocalyptic resignation (‘Oh no, we’re doomed as a field and as a society’) to defiance (‘There are things a computer algorithm cannot do and it is on that basis that we should promote the humanities’).”

On the other hand, some professors recognize its potential for research. Still, the idea that the professional work of careful analysis and discovery can be condensed into a question fed into ChatGPT raises serious concerns for academia. Professors and students alike grapple with the challenges of academic integrity and the supposed uselessness of humanities degrees. At the same time, the possibilities for research, learning concepts and sorting information remain largely unexplored and can seem promising. A central question remains: can the workings of online software offer any valid path to a deep understanding of the humanities?

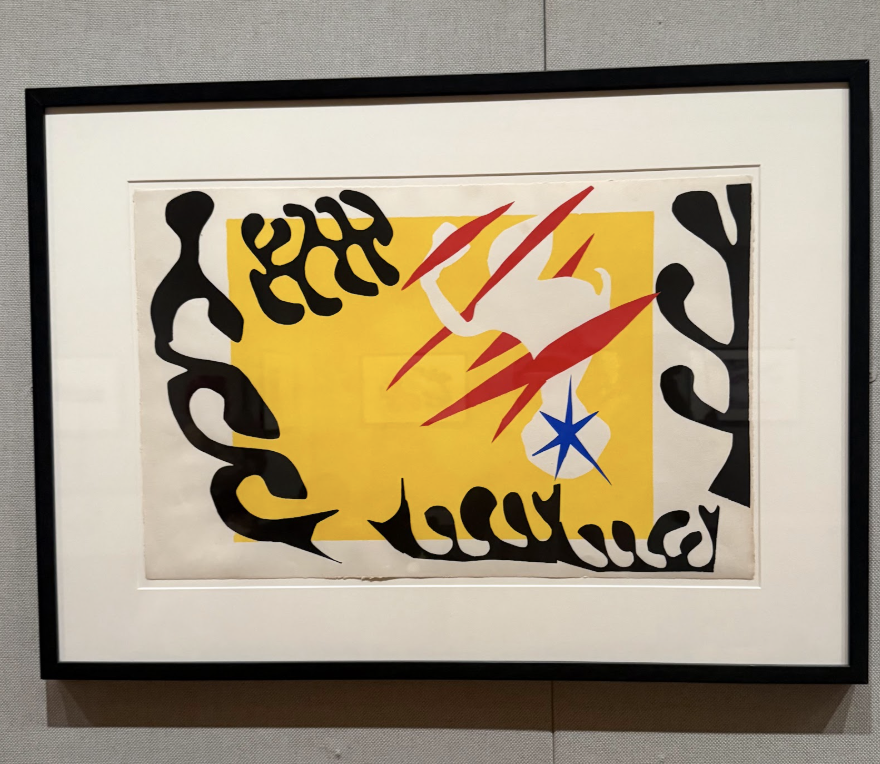

It can’t be denied that there’s something unnatural about a prescribed answer in humanities fields such as history or English, which are founded on processes of creation and excavation. Consider what it takes to learn a craft, whether that’s playing the violin, writing a story, wielding a hockey stick or picking up Italian. The process of learning is valuable precisely because we weren’t born with these skills: the end isn’t natural or known to us but is arrived at through time, trial and error. In the process, we develop relationships with our craft, with ourselves and with others. Childhood activities often come with memories of teams and coaches, achievements and struggles.

Creative pursuits are valuable not just for the new abilities they give us but also for the new selves we form along the way. Process creates relationships between student and subject matter as well as between student and self, and AI is all too easily poised to destroy these. When students use AI to generate answers or to write an essay, they remove themselves from the process of craft. It’s a great loss, one that cuts them off from a deeper relationship with the texts and with themselves.

Going further, AI hampers human empathy, or our relationships with others. Humanities disciplines such as history, English and theology (though taught with a degree of objectivity in the classroom) have a distinctive ability to ignite personal connections in a way that technical subjects such as chemistry and biology may struggle to. These personal connections and interests often receive attention only in humanities classes, where students work through their relationships to texts in conversation or writing. AI substitutes itself for the very human thinking that strengthens our emotional relationships to texts and to one another.

In short, when we prioritize shortcuts and simplicity, we dull both the rewards of process and our capacity for empathy. Though AI may promise benefits such as learning support and source identification, these cannot substitute for the process and empathy that are key to the humanities.

At many universities, including Fordham, the use of AI is left to the discretion of the instructor, which has produced a range of approaches. McEleney maintains a strict ban in his classroom on the basis that “it’s incapable of performing the kind of high-level critical, analytical, and interpretive work involved in humanities courses.” Orit Avishai-Bentovim, professor of sociology at Fordham, on the other hand, allows the use of AI on the condition that students are honest about it: “I see it as a tool, as any other, that one may use to advance their learning journey but which one has to be honest about. Thinking about the humanities and the social sciences, at least for now, students need to know how to write, sift through information, line up evidence, make an argument.”

A diversity of policies is, for the time being, the best way to move forward with AI in academia. This choice prioritizes individual discretion and critical thinking on the part of the professor; and aren’t these the very capacities that AI puts in jeopardy? In a world where simplicity and shortcuts are well within reach, this approach places on academia a burden of thought and procedure that highlights the professor as an independent thinker. Human voices emerge as the arbiters of their own history, art, literature and, hopefully, their own future.

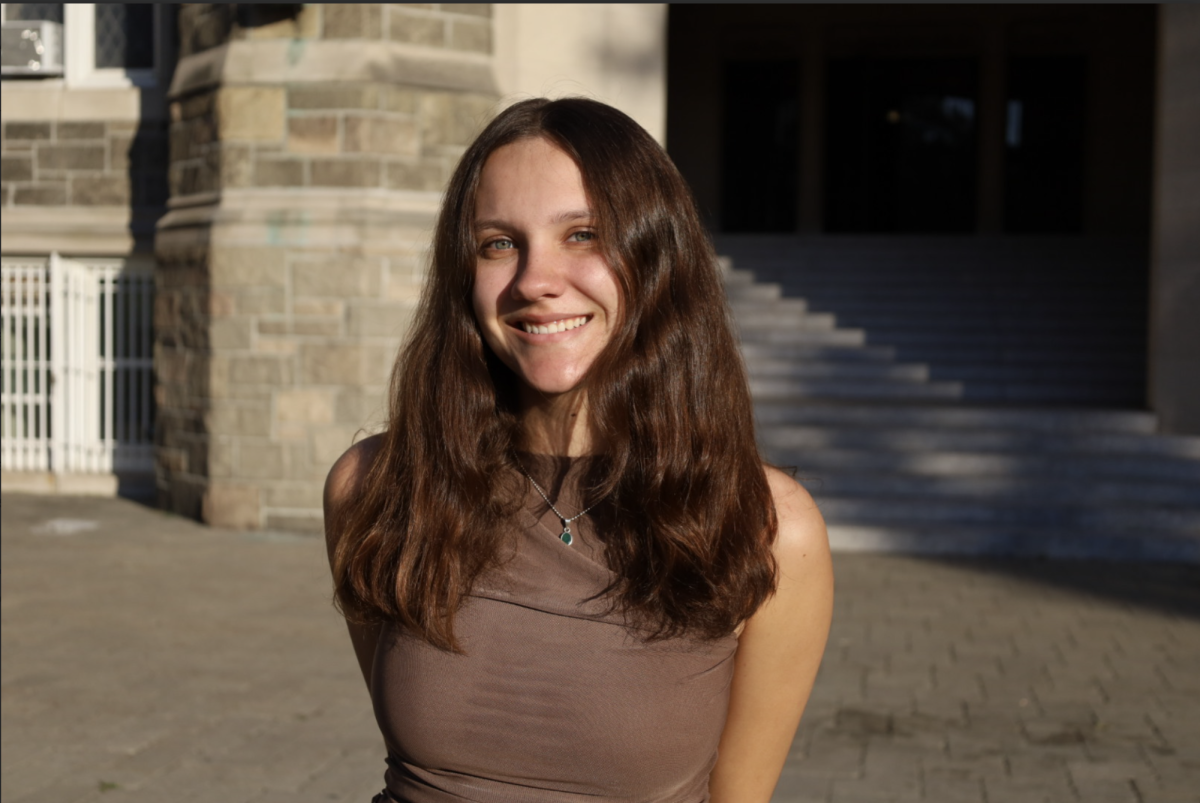

Adithi Vimalanathan, FCRH ’26, is an economics and English major from Jersey City, N.J.