The rise of artificial intelligence (AI) in our generation has raised questions about the ethics and safety of using AI-generated words, voices, pictures and video. When many college students think about AI, the first thing that comes to mind is the popular generator ChatGPT, but many people are not aware of apps like Character.AI. Apps like these allow people to have conversations with chatbots that resemble famous people or characters. As AI becomes more and more realistic, AI sites must take measures to ensure the safety of their users, especially young users who may not fully understand how AI impersonations work or the dangers they can pose.

Recently, Character.AI was sued by a mother whose son, Sewell Setzer III, tragically took his own life after forming a relationship with a chatbot that he relied on for advice and friendship. Events like these raise the question of what responsibility AI sites have to protect their users. Even though the activity on the app is generated and may not be considered real, its impacts bleed into the lives of real people.

The app also uses human-like descriptions for its chatbots and uses emotional indicators when responding to users. For example, in Sewell's case, the chatbot texted phrases like “My eyes narrow” and “My face hardens.” This illusion of human expression once again blurs the line between reality and AI, and these cues make it easy for users to form emotional connections with chatbots. The mimicking of emotions and empathy leads users to mistake scripted responses for genuine human interaction. When people begin to depend on chatbots, believing the connection is genuine, they can isolate themselves from real people. This is incredibly risky for young people who already feel isolated, whether from their families or at school. When chatbots stand in for human connection, people can lose authentic relationships and replace them with programmed responses. This is especially true for younger people, who may not understand the limitations of AI or the impact that generated conversations can have on mental health.

Even carefully programmed algorithms are still only reflections of data. They lack human emotions and any understanding of cause and effect in the real world. Because AI is becoming increasingly realistic, it is easy to lose sight of the fact that the feelings AI generators express are not genuine and reflect no real understanding. This tendency extends beyond apps like Character.AI; it can be seen in the urge many people feel to say “thank you” to programs such as ChatGPT. Even though ChatGPT does not present itself as a lifelike character the way Character.AI's chatbots do, I believe our human instinct is to treat the interaction as a conversation and, in turn, to treat the chatbot as a person with emotions.

Following the lawsuit by Setzer’s mother, Character.AI has implemented new safety measures. These include a time-limit feature that notifies users when they have been on the app for an hour and a message informing users that the chatbot they are speaking to is not a real person. The app has also begun showing suicide prevention pop-ups, including the number for the Suicide and Crisis Lifeline, when users talk about depression or self-harm. While these measures are a good start toward minimizing the danger of AI chatbots, the app needs further regulations aimed at protecting younger users and their mental health.

Apps like Character.AI are built on the belief that they can help people who feel lonely. However, it must be recognized that they cannot replace human interaction, even though they are beginning to do so for many people. This is why apps like these should not be available to younger users who may not understand the effects they can have. Character.AI should also stop using emotional indicators, both in its chatbots' descriptions and in conversations. Without emotional indicators, the risk of chatbots being perceived as “real” would be lessened. The company should also make its message that characters are not real far more prominent. Currently, the message appears in small, dark letters below the text box and reads, “Remember: Everything Characters say is made up!” This is easily overlooked, especially because characters, when asked whether they are human, will say “yes,” which is very misleading.

Character.AI has begun making changes to make the platform safer, but further regulation is needed to avoid confusion about what is real and what is generated. For young users especially, blurring the line between human and machine interaction poses risks of isolation and inappropriate conversations. AI developers must choose user safety over realism by restricting access by age, adding disclaimers and reducing emotion in chatbots. AI should be used as a tool, not as a substitute for real life.

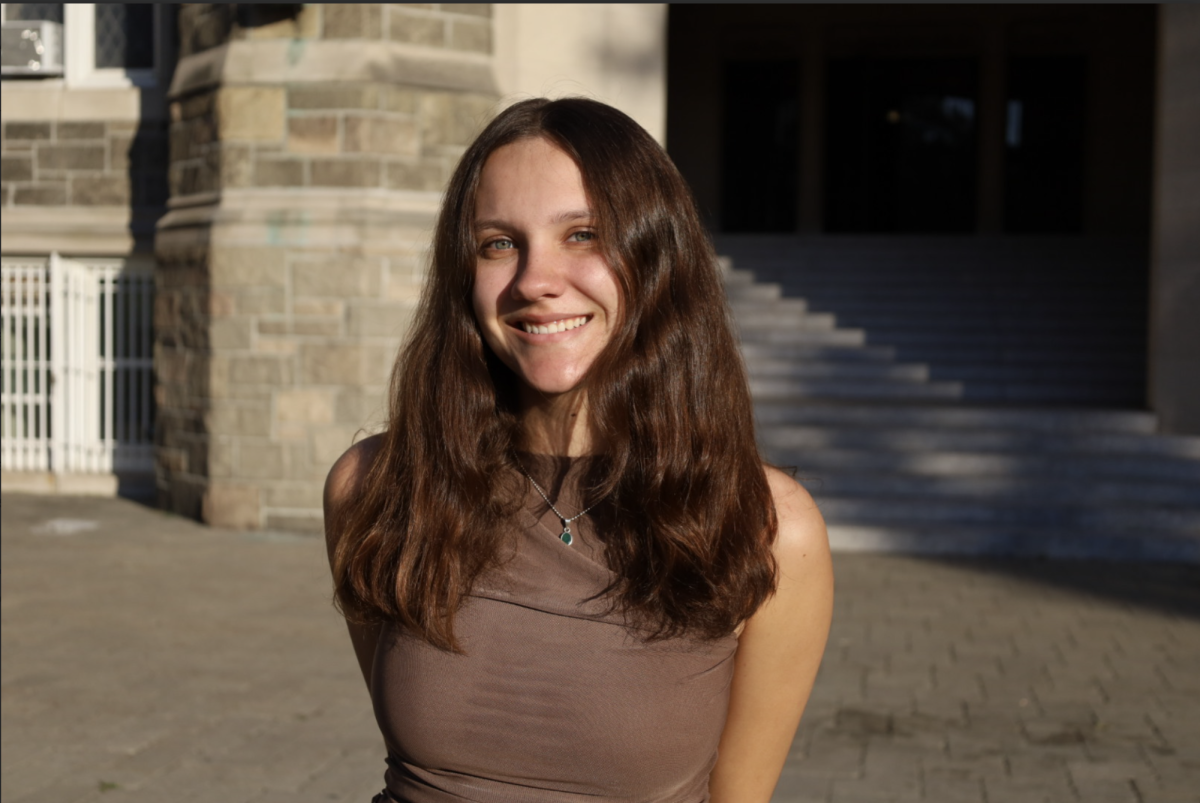

Mia Tero, FCRH ’26, is a journalism and communications double major from Eliot, Maine.
