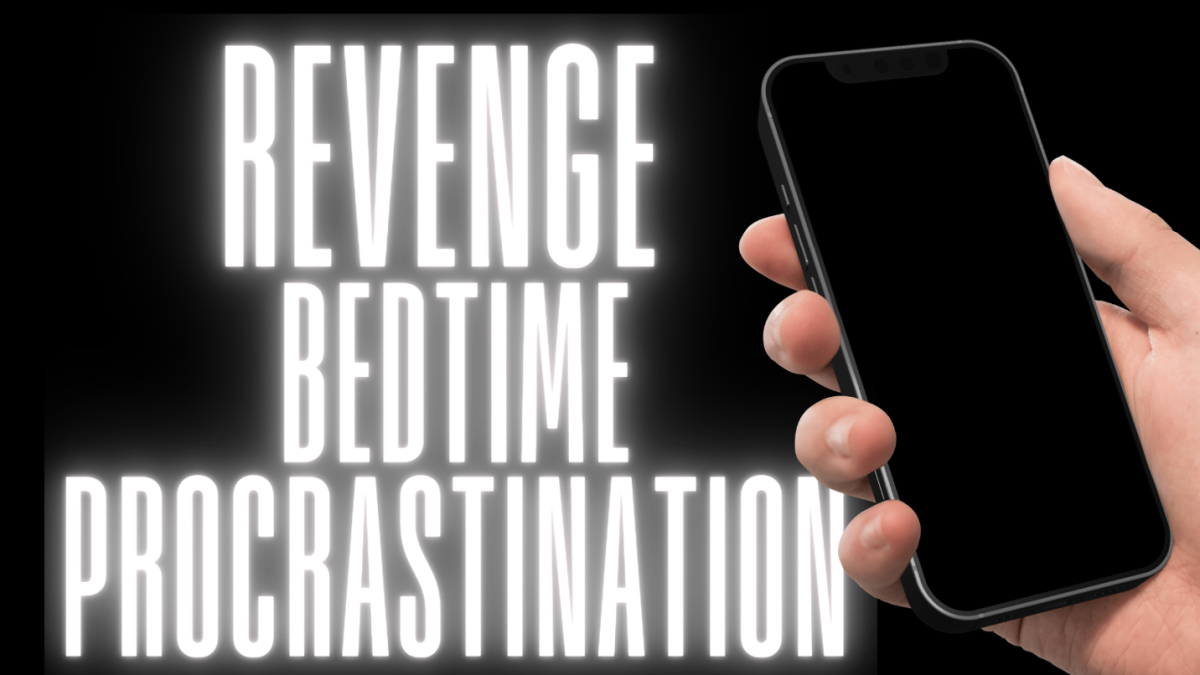

In a world of rapidly evolving technology, people interact with artificial intelligence (AI) more often than they realize. Phones that unlock using facial recognition and autocorrect on mobile devices are examples of everyday AI. Many people use these functions habitually, but as AI gains traction and continues to evolve, more and more companies are bringing it into their workplaces. The newest concern for many is the use of AI in hiring.

In recent decades, with the rise of online applications, the number of resumes scanned by AI systems has grown. Applicant tracking systems reject many resumes before they ever reach human eyes. These technologies are still in their early stages, and many people who have encountered them report negative experiences. Interviewees who expected a human voice and face at their job interviews have been shocked to find themselves chatting with a “Siri-like” bot.

Earlier this year, Ty, an anonymous job seeker, spoke with The Guardian about their less-than-positive experience in an AI-led interview. Ty said they realized the interviewer was actually a bot that could not tell when Ty was still talking. “After cutting me off, the AI would respond, ‘Great! Sounds good! Perfect!’ and move on to the next question,” Ty said. Adele Watson, a journalist based in the United Kingdom, recently had a similar experience. She said that when she joined the online interview, she was surprised to find herself alone in the chat room: “In this case, I was just talking to myself — or an AI system — with no measure of how well I was doing. I couldn’t read anyone’s face, body language or see them nod yes. That small type of human reassurance that you get in a real interview is completely lost when companies outsource interviews to AI.” Watson did not get a second interview.

The video “Future of Work: Artificially Intelligent Hiring,” released in 2022 by Bloomberg L.P., includes testimonials from various professionals in the AI field. The video explains how bias in hiring software can arise: the technology learns from previous hires which candidates count as good or bad, and so may effectively be making existing biases more efficient. Professionals such as Gracy Sarkissian, director of NYU’s Wasserman Center, advise that resumes be written so that a computer can parse them while a human reader will still find them appealing if they advance to that stage. Another pitfall of AI hiring is that applicants cannot explain gaps in employment. If a person reads the resume, the applicant may be able to justify time off in a later conversation, rather than being discarded outright because the AI categorized a two-year gap as “bad.”

In the same Bloomberg video, Albert Fox Cahn, founder of the Surveillance Tech Oversight Project, discusses the dangers of unregulated AI use in hiring. The organization’s slogan is “We Watch the Watchers.” He wisely points out, “We don’t trust companies to self regulate when it comes to pollution, we don’t trust them to self regulate when it comes to workplace comp [sic], why on earth would we trust them to self regulate AI?” His question is a reminder that, in the absence of clear laws or policies, companies are not yet required to disclose whether they use AI in hiring. While there are areas in which AI use is overwhelmingly positive and beneficial, hiring processes should maintain their human integrity. When a person’s career and future are on the line, it should not be up to a computer to decide whether they are worthy.

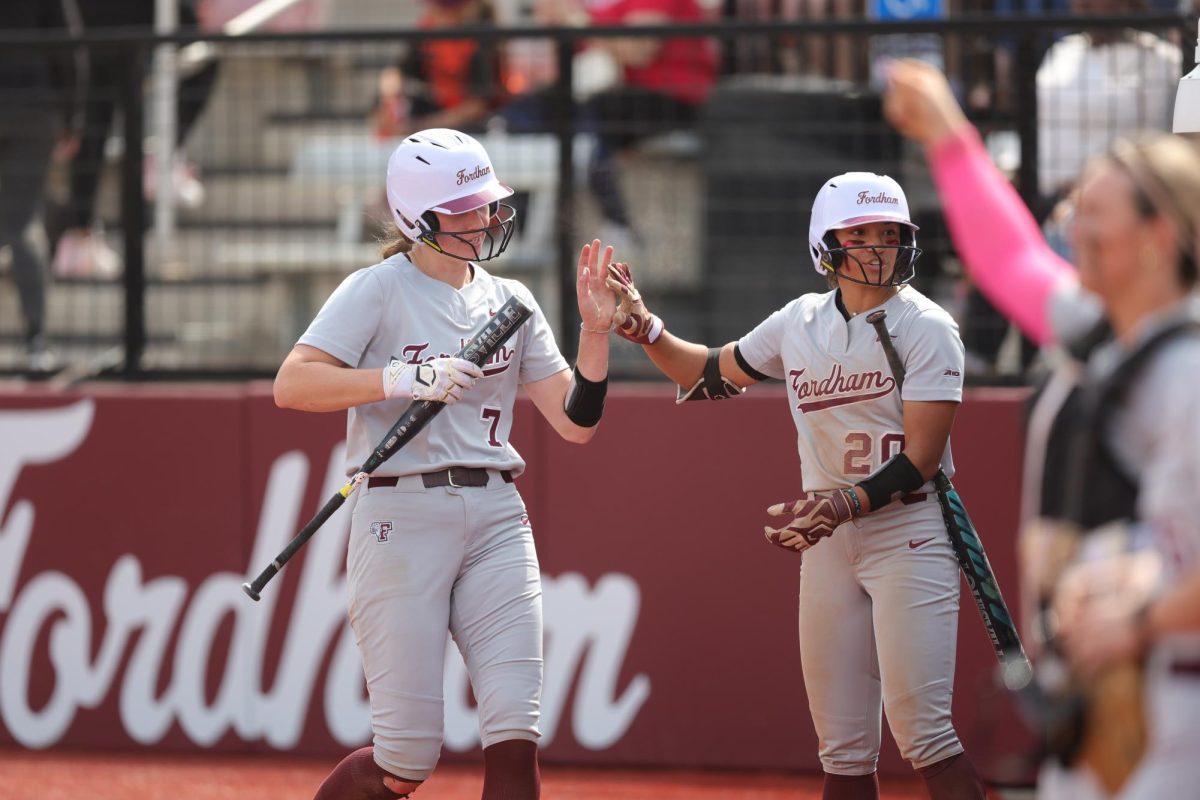

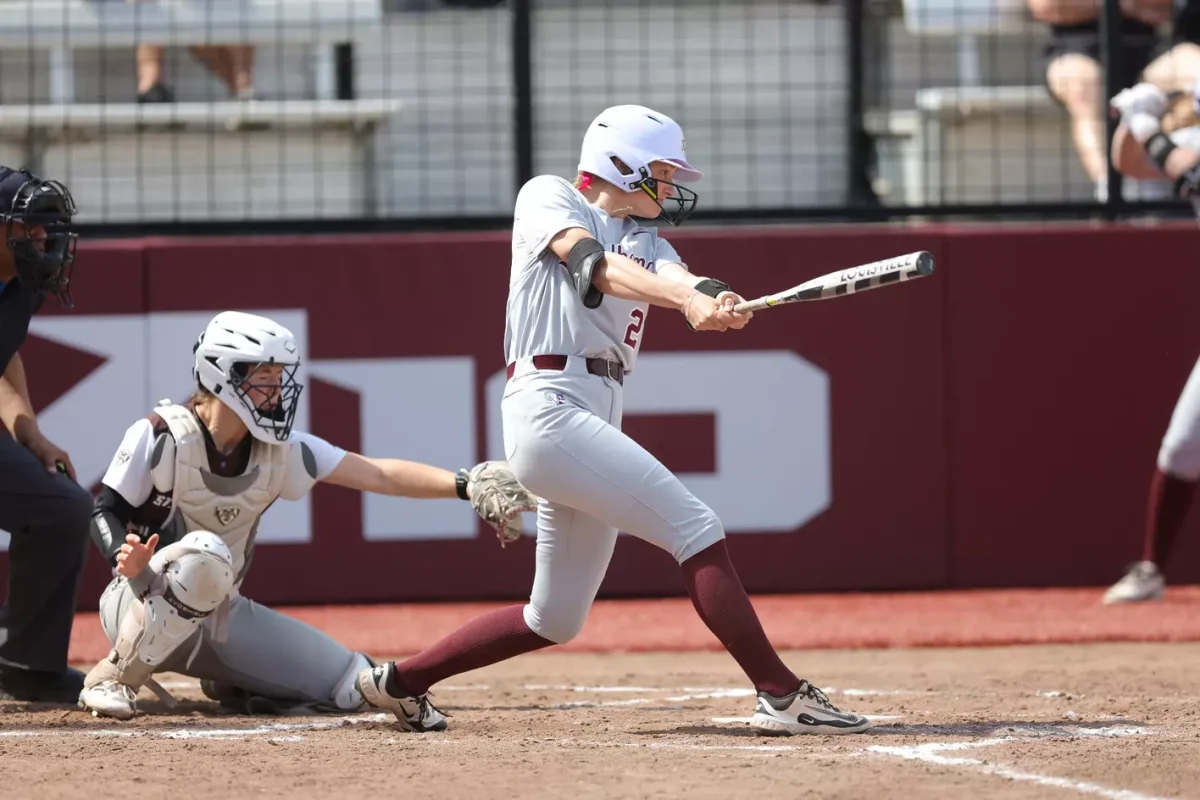

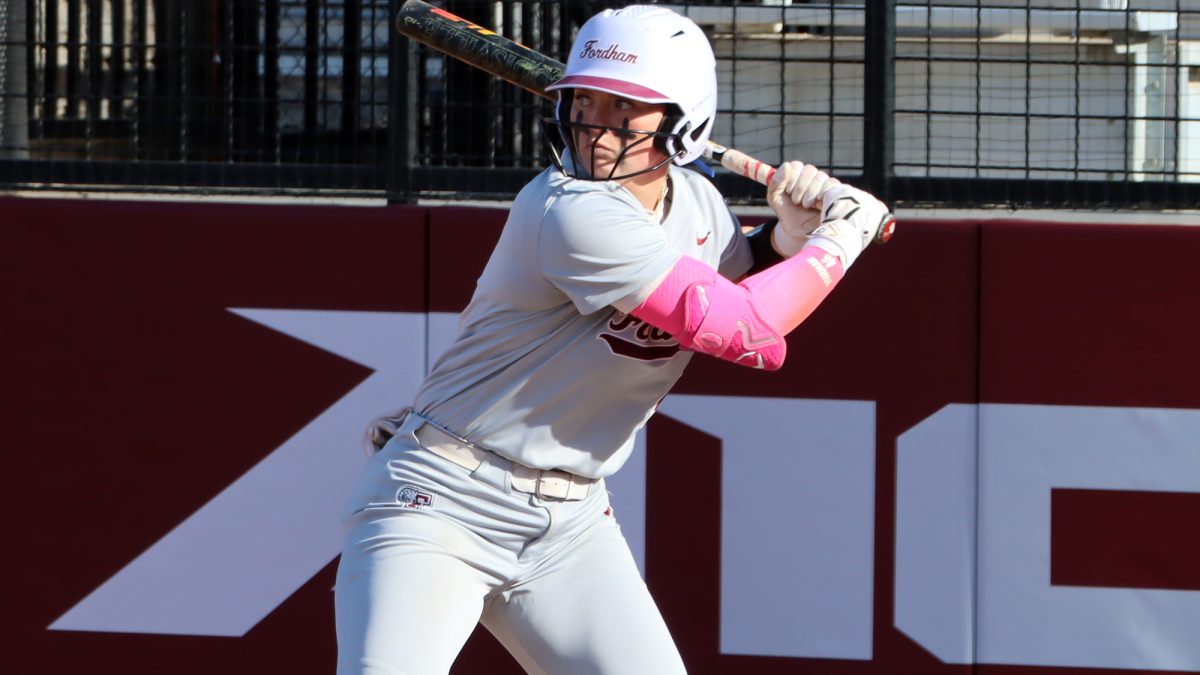

These algorithms learn from previous hiring decisions, so when human recruiters have shown bias, the programs absorb it and snowball it into rules that seem logical to them. Many have shown bias against women or applicants with “Black-sounding names.” Hilke Schellmann, the author of “How AI Can Hijack Your Career and Steal Your Future,” shared that marginalized groups can frequently “fall through the cracks, because they have different hobbies, they went to different schools.” Several companies have had to scrap AI recruiting tools after discovering that the tools had developed biases against people of color or systematically rejected resumes listing more “feminine” activities, including sports such as softball. Without adequate regulations or policies governing AI hiring, discriminatory outcomes like these could continue. Is this what we want the future of our workplaces to be?

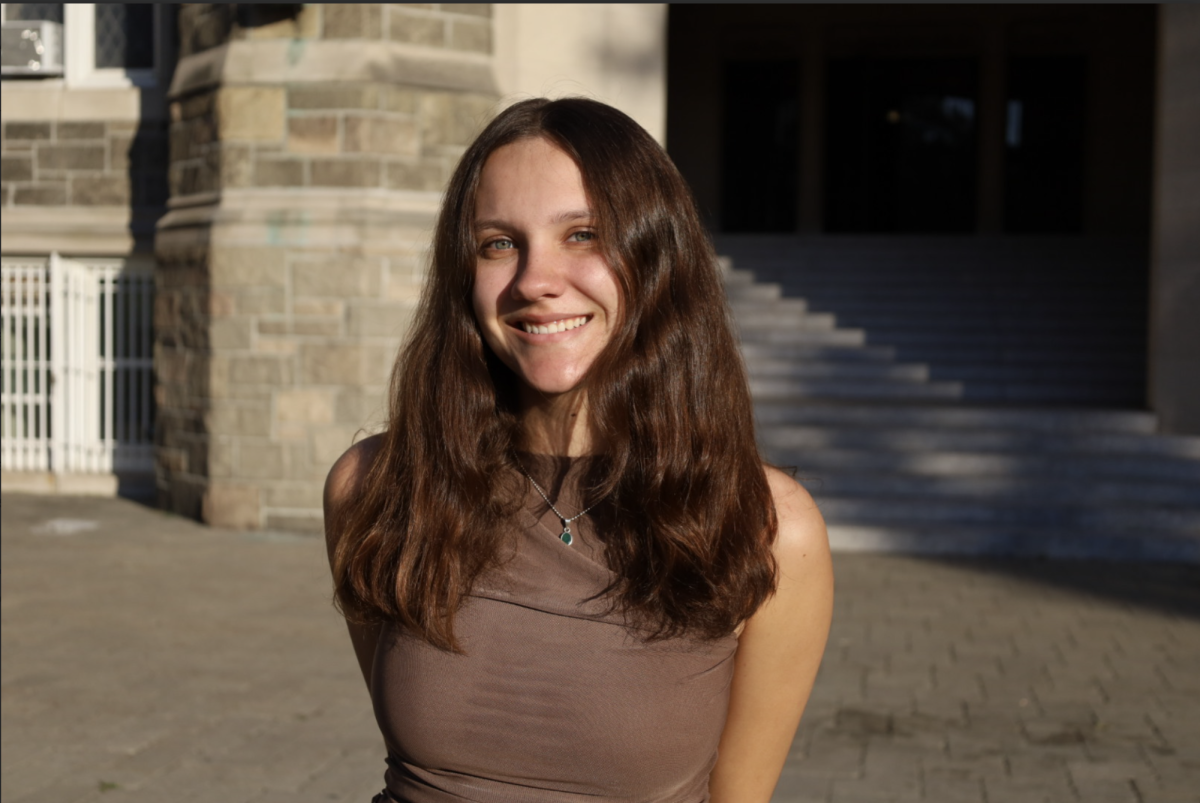

Lusa Holmstrom, FCRH ’25, is an English and Spanish studies major from Venice, Calif.