Artificial Intelligence Unmasks Need for Privacy Protection

Fifteen years ago, it would have seemed ridiculous to worry about a tiny computer stealing your personal information. Today, it is a highly controversial topic.

People of all ages carry powerful technology wherever they go. Children, teens and even grandparents own more electronic devices now than ever before. As a result, artificial intelligence, or AI, has developed rapidly. However, the use of these amazing technologies comes at a price: your identity.

AI researchers at big corporations began by working to maximize convenience for their users. Early applications included private passcodes and fingerprint scanners as forms of personal identification to secure devices. Recent developments, however, have become increasingly intrusive. For example, facial recognition technology has created an uproar over personal security.

Huge technology companies — including Google, Amazon and Microsoft — need to train their new employees, AI bots, to be able to perform facial recognition in order to remain current in the evolving world of social media. However, the techniques they have been applying to do so are disturbing.

These companies have been illegally taking the faces of unknowing people to fast-track their apps to success. It was recently discovered that photos from the photo website Flickr, surveillance cameras and dating applications have been tapped to aid massive tech companies’ AI research.

The reasonable next step would be to determine whether your identity has been put in jeopardy, but sadly it is too late for the majority of people; they are unaware of this infringement until they can no longer reclaim their virtual property. Today’s culture promotes self-advertisement through social media, but these sites are unsafe and do not provide clear insight into what personal information could be used for. Stricter laws must be implemented if leading tech companies continue to act unethically.

Large companies’ popular methods to advance AI blatantly contradict the privacy standards lawmakers have proposed. The Commercial Facial Recognition Privacy Act, a bill introduced in the U.S. Senate in March 2019, would require companies to “obtain explicit affirmative consent from end-users to use such technology after providing notice about the reasonably foreseeable uses of the collected facial-recognition data.”

Not only have few offenders provided information on the whereabouts of users’ identities, but many have also refused to state in clear words how they intend to use this information. Furthermore, few companies ask for permission to use users’ identities in understandable layman’s terms. Rather, the “agreement” they end up providing is laborious and confusing. As a short experiment, I checked Amazon’s privacy policy.

The language used throughout it is vague. The section titled “For What Purposes Does Amazon Use Your Personal Information?” includes a line that states, “When you use our voice, image and camera services, we use your voice input, images, videos, and other personal information to respond to your requests, provide the requested service to you, and improve our services.”

What does it mean to “improve our services”? It is unsettling that, when you simply ask Amazon’s Alexa or an Echo device a basic question, a highly influential company gains access to a recording of your voice.

That being said, Amazon provides instructions on how to delete your voice recordings. However, as we all know, once something is on the Internet, it can never be fully deleted. As much as I would like to commend Amazon and other companies for their facade of privacy, I believe their privacy statements are largely perfunctory and only exist to keep their actions within legal privacy standards.

I am not advocating against the advancement of technology through AI. In fact, AI has provided imaginative solutions for home protection, everyday problems and many other issues. I have not lost hope that more regulations will be passed over time as we continue improving our gadgets. Indeed, I believe that additional legislation is the only way we can move forward in an ethical and protected manner.

I hope that the public becomes more aware of the risks they take as consumers. One solution would be for companies to place a heavier emphasis on the privacy policies and agreements for their devices, and to state plainly how important those documents are. If the agreements were reworded to be less ambiguous and confusing, I would place less blame on the companies themselves.

Unfortunately, the hold these corporations have on us is unavoidable. Ask yourself — is the convenience of today’s technology worth your identity?

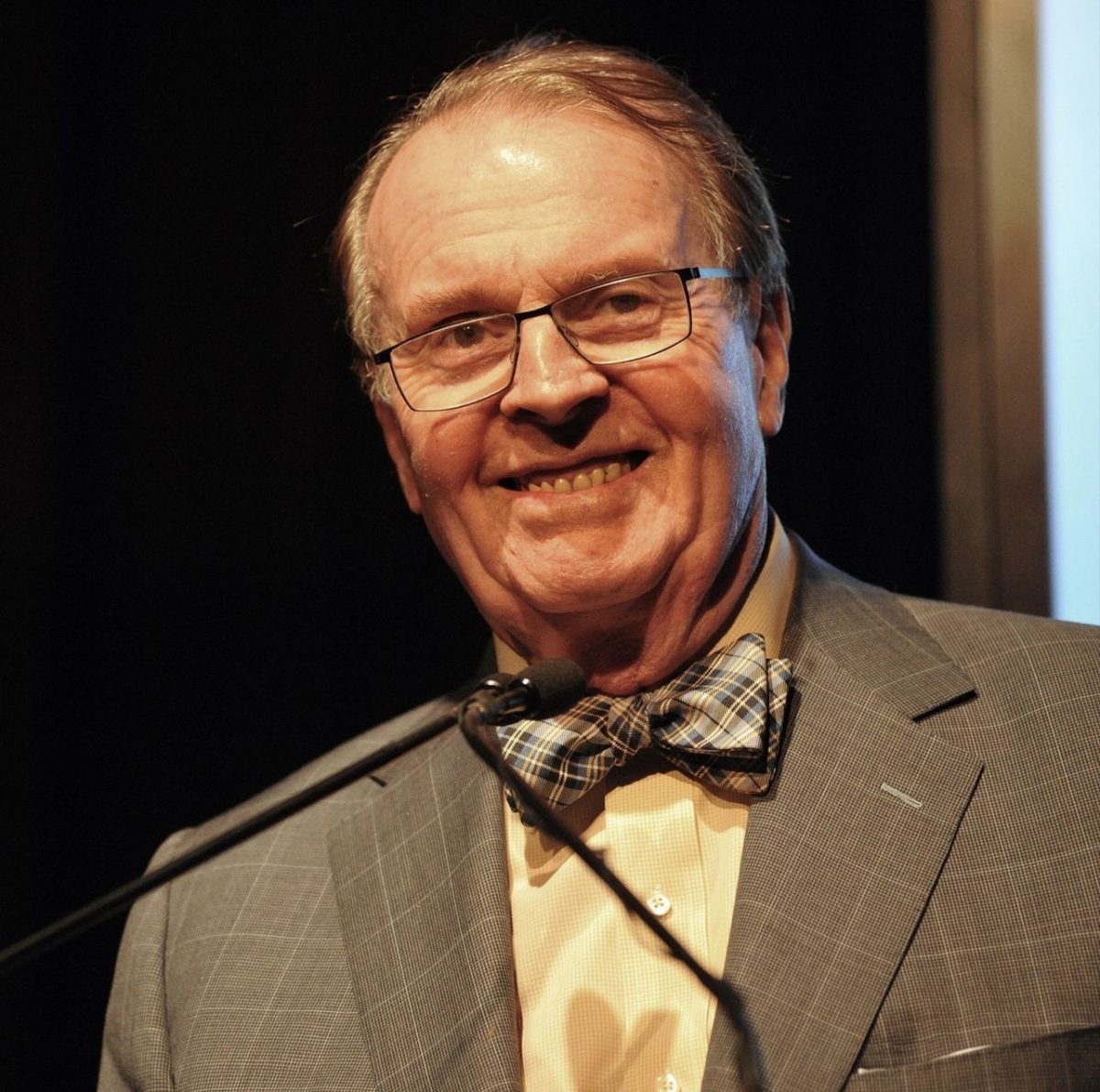

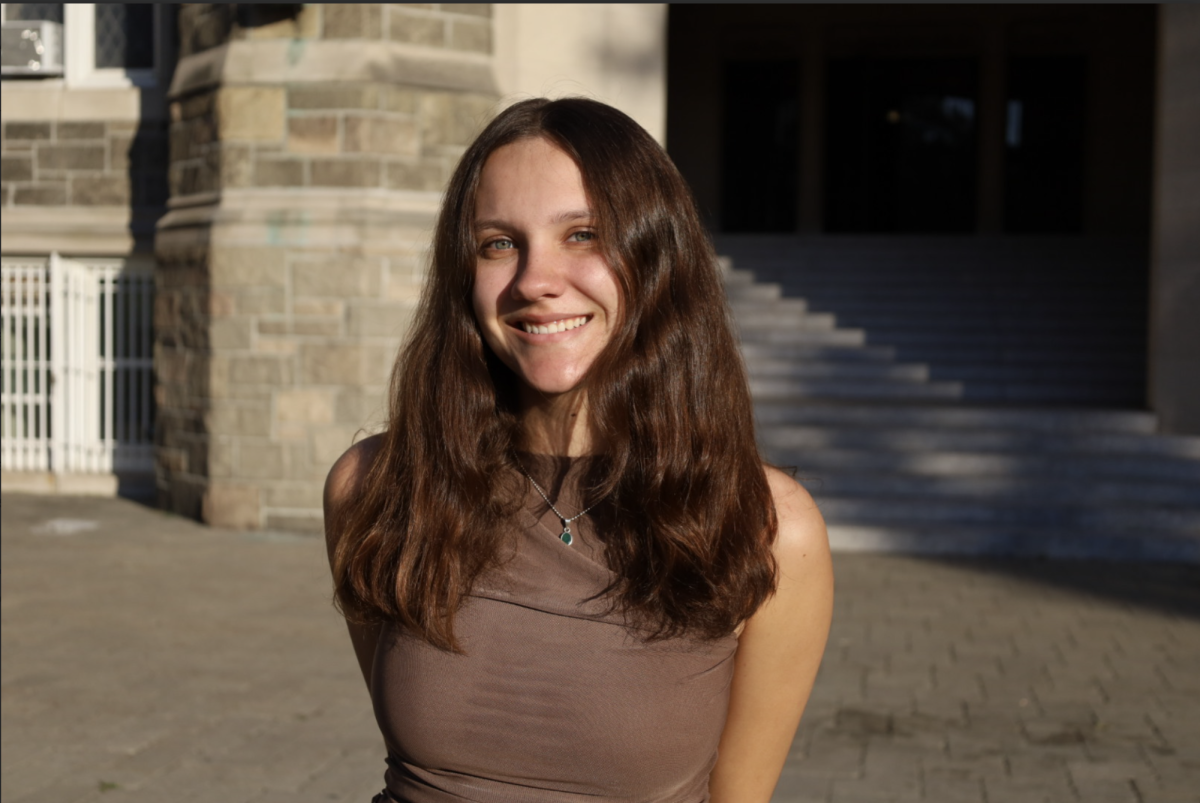

Haley Daniels, FCRH ’23, is a psychology and English major from Hershey, Penn.